Introduction: Decoding Kubernetes and OpenShift

The Evolution of Container Orchestration Platforms

Tracing the history of Kubernetes and OpenShift is essential for grasping their respective roles and applications. In the early days of software deployment, applications ran directly on physical servers. Simple as this approach was, it carried serious problems: rigid hardware constraints, persistent difficulty scaling, and conflicts between the software dependencies of applications sharing a machine.

Then came the knight in shining armor: virtualization technologies, which eased some of these problems. A single physical server could now host many virtual machines. However, virtualization was no silver bullet. Each virtual machine carries a full guest operating system, so virtual machines proved resource-hungry, slow to start, and cumbersome to manage.

Containers, the underdog of this saga, became a beacon of hope. As a lighter-weight alternative to virtual machines, containers package an application together with its dependencies so that it behaves consistently across environments. But as container counts grew into the hundreds or thousands, a pressing question emerged: how can they be managed efficiently? The answer was container orchestration platforms such as Kubernetes and OpenShift.

Kubernetes: A Beacon of Collective Innovation

Often abbreviated as K8s, Kubernetes is an open-source platform for automating the deployment, scaling, and management of containerized applications. Google originally developed Kubernetes, and the Cloud Native Computing Foundation (CNCF) now stewards its development.

In a typical Kubernetes cluster, the control plane acts as the conductor while the worker nodes play the symphony. The conductor keeps the orchestra in time while the musicians perform the parts assigned to them. Kubernetes provides an abstraction layer that lets developers concentrate on application logic rather than on the complexities of the underlying infrastructure.

OpenShift: An Enterprise Distribution of Kubernetes

OpenShift, developed by Red Hat, is a family of container orchestration products built on Kubernetes. It includes the functionality of Kubernetes and adds its own features and enhancements aimed at enterprises.

Features that OpenShift adds include an integrated container catalog, advanced networking capabilities, and a comprehensive web console. OpenShift also emphasizes security, with fine-grained role-based access control (RBAC), tight SELinux integration, and strict container isolation.

Kubernetes vs. OpenShift: A Cavalcade of Differences

Compared side by side, Kubernetes and OpenShift target different use cases and users. The flexibility and vibrant community of open-source Kubernetes make it a natural choice for developers and startups. OpenShift, with its enterprise-oriented features and commercial support from Red Hat, appeals to large organizations with complex requirements.

In the following sections, we examine the features, architecture, deployment models, and management practices of Kubernetes and OpenShift, along with their scalability, security, integration options, and future direction. Our goal is to give you the insight needed to choose the platform better suited to your needs.

Understanding Kubernetes: Key Concepts and Principles

Commonly known as K8s, Kubernetes has become the leading platform for orchestrating containers. Its appeal lies in how it simplifies complex application deployments, enables scaling, and improves operational agility. Kubernetes can manage a wide variety of containerized workloads, which makes it a solid foundation for productive, well-governed operations.

Delving Deep into the Realm of Kubernetes

A closer look at Kubernetes shows that much of its capability comes from its architecture. It follows a client-server design built around two groups of components: the control plane and the worker nodes.

The control plane is the cluster's main regulatory hub. It makes global decisions such as where to schedule workloads, monitors the state of the cluster, and responds to events to keep applications running as specified. Its components include the API server (kube-apiserver), the etcd key-value store, the scheduler (kube-scheduler), the controller manager (kube-controller-manager), and, when the cluster runs on a cloud provider, the cloud controller manager (cloud-controller-manager).

The worker nodes, by contrast, run the application workloads. Each worker node runs a kubelet agent that communicates with the control plane, and a container runtime that actually starts and runs the containers.

Illuminating the Architectural Backbone of Kubernetes

Kubernetes is built from several independent objects that together give it its flexibility. The most important are Pods, Services, Volumes, Namespaces, and Deployments.

- Pods: The smallest deployable unit in Kubernetes, a Pod wraps one or more containers together with shared storage, a unique cluster IP address, and the options that govern how the containers run.

- Services: A Service groups a set of Pods behind a stable IP address and DNS name, so that other components can reach those Pods reliably even as individual Pods are replaced.

- Volumes: A Volume provides storage that the containers in a Pod can mount like a file system. A container's own file system is lost when the container restarts; Volumes (persistent volumes in particular) let data survive. Because Kubernetes supports many Volume types, it offers a range of storage options.

- Namespaces: Namespaces divide a single cluster into virtual partitions, so that multiple teams or projects can work on the same cluster without their resources colliding.

- Deployments: A Deployment manages ReplicaSets and their Pods declaratively. You describe the desired state, and the Deployment controller changes the actual state to match it at a controlled rate.
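The objects above are tied together by labels: a Service (like a Deployment) finds its Pods through a label selector. The following minimal sketch models Pods as hypothetical (name, labels) pairs rather than real API objects and applies the equality-based rule a Service selector uses: a Pod is selected only if every key/value pair in the selector also appears among its labels.

```python
from typing import Dict, List, Tuple

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """Equality-based matching: every selector pair must appear in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Labels, pods: List[Tuple[str, Labels]]) -> List[str]:
    """Names of the Pods that a Service with this selector would route to."""
    return [name for name, labels in pods if matches(selector, labels)]


# Hypothetical Pods: two web replicas and a database.
pods = [
    ("web-1", {"app": "web", "tier": "frontend"}),
    ("web-2", {"app": "web", "tier": "frontend"}),
    ("db-1", {"app": "db", "tier": "backend"}),
]

print(select_pods({"app": "web"}, pods))  # ['web-1', 'web-2']
```

Extra labels on a Pod do not prevent a match; only a missing or different selector value does.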

Grasping the Operational Mechanism of Kubernetes

Kubernetes follows a few core principles:

- Declarative Configuration: Instead of issuing a sequence of imperative commands, you declare the desired end state, and Kubernetes works out how to reach it.

- Autoscaling: Kubernetes can automatically adjust the number of Pod replicas based on CPU utilization or other application-specific metrics.

- Self-Healing: Kubernetes restarts containers that fail, replaces Pods when needed, kills containers that do not respond to user-defined health checks, and does not send traffic to them until they are ready to serve.

- Service Discovery and Load Balancing: Kubernetes can expose a container using a DNS name or its own IP address. When traffic to a container is high, it load-balances and distributes the network traffic so that the deployment stays stable.
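Declarative configuration and self-healing both rest on a reconciliation loop: a controller repeatedly compares the desired state with the observed state and acts to close the gap. The Python sketch below is a deliberately simplified model of that decision, not the actual ReplicaSet controller; the PodState type and its healthy flag are hypothetical stand-ins for real Pod status.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PodState:
    name: str
    healthy: bool  # hypothetical stand-in for crashes, failed probes, etc.


def reconcile(desired: int, pods: List[PodState]) -> Tuple[List[str], int]:
    """Return (names of Pods to delete, number of new Pods to create)."""
    # Self-healing: unhealthy Pods are removed so they can be replaced.
    to_delete = [p.name for p in pods if not p.healthy]
    healthy = [p for p in pods if p.healthy]

    # Scale down: remove surplus healthy Pods beyond the desired count.
    surplus = len(healthy) - desired
    if surplus > 0:
        to_delete += [p.name for p in healthy[-surplus:]]

    # Scale up: create enough Pods to reach the desired count.
    to_create = max(desired - len(healthy), 0)
    return to_delete, to_create


observed = [PodState("web-a", True), PodState("web-b", False)]
print(reconcile(3, observed))  # (['web-b'], 2)
```

Running this function again after the actions are carried out returns no work, which is what makes the loop safe to repeat indefinitely.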

A solid understanding of these concepts is essential for getting the most out of Kubernetes. The following sections turn to OpenShift, a platform built on these same Kubernetes foundations that extends its container orchestration capabilities.

Introduction to OpenShift: The Next Generation of Kubernetes Deployment

OpenShift is Red Hat's container platform built on top of Kubernetes. Offered as a Platform as a Service (PaaS), it provides a secure infrastructure that is well suited to software development. Think of OpenShift as a refined distribution of Kubernetes, the widely used scheduler for container workloads, extended with additional functionality and tools for a better user experience.

A Detailed Inspection of OpenShift

OpenShift is built for enterprise-scale applications. It runs containers orchestrated by Kubernetes on top of Red Hat Enterprise Linux, providing an efficient, scalable framework for building and delivering software in a shared cloud environment.

OpenShift offers a unified development environment in which developers build and manage containers on top of Kubernetes. It comes with a full-featured command-line interface (CLI), oc, for developers who prefer the terminal, as well as a graphical web console for those who prefer visual tools.

OpenShift - An Advanced Version of Kubernetes

Just as a Linux distribution packages the Linux kernel with additional software, OpenShift is a distribution of Kubernetes. It extends the core Kubernetes engine with additional capabilities, a user-friendly interface, a suite of developer tools, and strengthened security.

OpenShift goes beyond Kubernetes by building applications directly from source code. Developers can point the platform at their source repository, and OpenShift produces a container image and deploys it, streamlining the path from code to running application.

The Architectural Anatomy of OpenShift

Like Kubernetes, OpenShift's architecture revolves around pods. A pod is the basic unit that Kubernetes schedules: technically, it is the smallest deployable unit in a cluster and can host multiple containers. OpenShift builds on this with deployment configurations (DeploymentConfig objects), which control how new versions of pods are created and rolled out.

OpenShift also introduces routes for exposing services. A route gives a service an externally reachable hostname and forwards incoming traffic to the pods behind that service.
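A Route is an ordinary API object, so it can be written in YAML or JSON like any other manifest. The sketch below builds a minimal Route as a Python dictionary and prints it as JSON. The field names follow the route.openshift.io/v1 API as we understand it; the Service name, hostname, and port name are hypothetical.

```python
import json


def route_manifest(name: str, service: str, host: str,
                   target_port: str, tls_edge: bool = False) -> dict:
    """Build a minimal OpenShift Route sending traffic for `host` to `service`."""
    spec = {
        "host": host,
        "to": {"kind": "Service", "name": service},
        # targetPort may name a port defined on the Service (here "http").
        "port": {"targetPort": target_port},
    }
    if tls_edge:
        # Edge termination: the router terminates TLS and forwards plain HTTP.
        spec["tls"] = {"termination": "edge"}
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": name},
        "spec": spec,
    }


# Hypothetical Service name and hostname, for illustration only.
route = route_manifest("frontend", "frontend", "frontend.apps.example.com", "http")
print(json.dumps(route, indent=2))
```

Saving the output to a file and running `oc apply -f` on it would create the route; for simple cases, `oc expose service/frontend` generates a Route without a hand-written manifest.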

Navigating the Deployment and Management of OpenShift

Applications can be deployed on OpenShift through the web console, the CLI, or an Integrated Development Environment (IDE), and OpenShift supports many languages, including Java, Node.js, Ruby, Python, and PHP.

One of OpenShift's most appealing aspects is its management tooling. The web console and the CLI both support allocating resources, reviewing application status, and scaling. OpenShift also includes built-in logging and metrics that give a comprehensive view of how applications are behaving.

In essence, OpenShift packages the power of Kubernetes with added features and improvements that make it easier to use, providing a robust, secure, and scalable platform for running and managing containerized applications.

The Basic Architecture: Kubernetes vs OpenShift

Evaluating the Functionality of OpenShift and Kubernetes

OpenShift and Kubernetes are at the center of container orchestration. Both simplify complex procedures and have transformed traditional practices for running applications across many servers. Although they share the same goals, each has its own features, usability, and management approach.

The Inner Workings of Kubernetes

Kubernetes owes its popularity to a well-designed platform for managing Linux containers. Its primary focus is running containerized applications and scaling them.

The structure of Kubernetes resembles a well-coordinated orchestra. At its center is the control plane, the conductor of the symphony, which manages the cluster's nodes, tracks changes to applications, and handles other cluster-wide functions. Its key components are:

- API Server: The front end of the control plane, it exposes the Kubernetes REST API through which all cluster operations flow.

- Scheduler: Assigns newly created Pods to nodes based on resource availability and other constraints.

- Controller Manager: Runs the controllers that continuously drive the cluster's current state toward its desired state.

- etcd: A consistent, highly available key-value store that holds all cluster data.
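To make the scheduler's role concrete, here is a heavily simplified Python model of its decision, assuming CPU and memory requests are the only constraints. The real kube-scheduler runs many filter and score plugins; this sketch keeps just two ideas: filter out nodes that cannot fit the Pod, then prefer the node that would be least allocated afterwards. Node names and numbers are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: float   # cores
    cpu_requested: float  # cores already requested by Pods on the node
    mem_capacity: int     # MiB
    mem_requested: int    # MiB


def fits(node: Node, cpu: float, mem: int) -> bool:
    """Filter step: the Pod's requests must fit in the remaining capacity."""
    return (node.cpu_requested + cpu <= node.cpu_capacity
            and node.mem_requested + mem <= node.mem_capacity)


def least_allocated_score(node: Node, cpu: float, mem: int) -> float:
    """Score step: average fraction of CPU and memory left free after placement."""
    cpu_left = (node.cpu_capacity - node.cpu_requested - cpu) / node.cpu_capacity
    mem_left = (node.mem_capacity - node.mem_requested - mem) / node.mem_capacity
    return (cpu_left + mem_left) / 2


def schedule(cpu: float, mem: int, nodes: List[Node]) -> Optional[str]:
    feasible = [n for n in nodes if fits(n, cpu, mem)]
    if not feasible:
        return None  # no node fits: the Pod would stay Pending
    return max(feasible, key=lambda n: least_allocated_score(n, cpu, mem)).name


nodes = [
    Node("node-a", 4.0, 3.5, 8192, 2048),
    Node("node-b", 4.0, 1.0, 8192, 6144),
    Node("node-c", 2.0, 0.0, 4096, 0),
]
print(schedule(1.0, 1024, nodes))  # node-c
```

Here node-a is filtered out for lack of CPU, and node-c wins the score because it would have the most spare capacity left.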

Worker nodes, on the other hand, run the applications and their workloads. Each uses a kubelet to communicate with the control plane and a container runtime (historically Docker; today typically containerd or CRI-O) to run containers.

Exploring OpenShift

OpenShift, created by Red Hat, adds a set of tools that strengthen container-based workflows. Built on Kubernetes, it delivers a fully integrated Platform as a Service (PaaS) for running containerized applications.

OpenShift covers more of the application lifecycle than Kubernetes, at the cost of a more complex architecture. It includes the Kubernetes master and worker nodes and adds components such as:

- Router: The Router's task is to direct inbound network traffic to corresponding services within the cluster.

- Registry: A stateless, scalable server-side application that stores and distributes container images.

- Aggregated Logging: OpenShift employs a holistic EFK (Elasticsearch, Fluentd, and Kibana) stack for logging processes.

- Metrics: In OpenShift 3.x, Hawkular Metrics, Cassandra, and Heapster together collect, store, and expose metrics for visualization.

In addition, OpenShift ships with a browser-based administrative console alongside its command-line interface, whereas in Kubernetes a web UI such as the Kubernetes Dashboard is an optional add-on.

Weighing OpenShift against Kubernetes: A Comparative Analysis

Although Kubernetes and OpenShift share a common foundation, they differ substantially in design and capabilities. Kubernetes is a base platform designed to be extended with additional components. OpenShift, by contrast, is ready to use out of the box and provides a broad range of built-in functionality.

Comparing the Deployment Models: Kubernetes and OpenShift

Kubernetes and OpenShift are two of the most prominent orchestration tools. They may look similar on the surface, but a closer look reveals important differences.

Kubernetes began as an open-source project and focuses primarily on managing applications. Operators declare the desired state of the system in YAML or JSON, and Kubernetes allocates resources to match. Its standout strengths are its resilience to failure and its flexible rollout mechanisms. Rollouts can be tuned at the Pod level so that updates proceed without interrupting service, and if a rollout runs into problems, Kubernetes can roll back to the previous revision.

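For illustration, the simplest way to create a Kubernetes Deployment is a single command such as `kubectl create deployment nginx --image=nginx`, which creates a Deployment running the nginx image.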
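The smooth, Pod-level rollouts described above are governed by two fields of a Deployment's RollingUpdate strategy, maxSurge and maxUnavailable. The sketch below is a small helper of our own, not part of kubectl, that turns percentage settings into the bounds Kubernetes observes while replacing Pods.

```python
def rollout_bounds(replicas: int, max_surge_pct: int = 25,
                   max_unavailable_pct: int = 25) -> tuple:
    """Return (maximum total Pods, minimum available Pods) during a RollingUpdate.

    Percentages are converted to Pod counts the way Kubernetes documents it:
    maxSurge rounds up, maxUnavailable rounds down. 25% is the default for both.
    """
    surge = -(-replicas * max_surge_pct // 100)          # ceiling division
    unavailable = replicas * max_unavailable_pct // 100  # floor division
    return replicas + surge, replicas - unavailable


print(rollout_bounds(10))  # (13, 8)
```

With ten replicas and the defaults, Kubernetes may run up to 13 Pods at once during an update while keeping at least 8 available; integer arithmetic is used to avoid floating-point rounding surprises.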

OpenShift, a Red Hat product, extends this functionality. It combines the declarative Kubernetes model with imperative, command-driven workflows, giving users more control over how deployments are executed. Notably, it supports deployment triggers that start a new rollout automatically when an image in the image registry changes or when the deployment configuration changes. OpenShift also lets users decide when deployments happen, for example by starting them manually.

In addition to rollback support, OpenShift supports custom deployment strategies suited to advanced setups such as blue-green or canary deployments.
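One common way to run a canary or blue-green rollout on OpenShift is to let a Route split traffic between two Services by weight. The Python sketch below assumes two hypothetical Services, shop-v1 (stable) and shop-v2 (canary), builds such a Route with the route.openshift.io/v1 fields to.weight and alternateBackends, and computes the share of requests each Service should receive.

```python
from typing import Dict


def canary_route(name: str, stable: str, canary: str, canary_percent: int) -> dict:
    """Route that splits traffic between two Services by relative weight."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": name},
        "spec": {
            "to": {"kind": "Service", "name": stable,
                   "weight": 100 - canary_percent},
            "alternateBackends": [
                {"kind": "Service", "name": canary, "weight": canary_percent}
            ],
        },
    }


def traffic_share(route: dict) -> Dict[str, float]:
    """Expected fraction of requests per Service: each weight over the total."""
    backends = [route["spec"]["to"]] + route["spec"].get("alternateBackends", [])
    total = sum(b["weight"] for b in backends)
    return {b["name"]: b["weight"] / total for b in backends}


route = canary_route("shop", "shop-v1", "shop-v2", 10)
print(traffic_share(route))  # {'shop-v1': 0.9, 'shop-v2': 0.1}
```

Raising canary_percent step by step gradually shifts traffic to the new version; setting it to 100 in one step is, in effect, a blue-green switch.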

A typical OpenShift configuration declares these triggers, together with the rollout strategy, in a DeploymentConfig object.
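The following Python sketch builds such a DeploymentConfig as a dictionary and prints it as JSON. It declares a Rolling strategy, a ConfigChange trigger (redeploy when the configuration changes), and an ImageChange trigger that redeploys when the named image stream tag is updated. The application and image stream tag names are hypothetical. Recent OpenShift 4 releases deprecate DeploymentConfig in favor of standard Kubernetes Deployments, so treat this as an illustration of the trigger model rather than a recommendation.

```python
import json


def deployment_config(name: str, image_stream_tag: str, replicas: int = 2) -> dict:
    labels = {"app": name}
    return {
        "apiVersion": "apps.openshift.io/v1",
        "kind": "DeploymentConfig",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": labels,  # DeploymentConfig takes a plain label map here
            "strategy": {"type": "Rolling"},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    # Placeholder: the ImageChange trigger fills in the resolved image.
                    "image": image_stream_tag,
                }]},
            },
            "triggers": [
                {"type": "ConfigChange"},
                {
                    "type": "ImageChange",
                    "imageChangeParams": {
                        "automatic": True,
                        "containerNames": [name],
                        "from": {"kind": "ImageStreamTag", "name": image_stream_tag},
                    },
                },
            ],
        },
    }


# Hypothetical application and image stream tag.
print(json.dumps(deployment_config("shop", "shop:latest"), indent=2))
```

With the trigger in place, pushing a new image to the tracked tag starts a rollout automatically; `oc rollout latest dc/<name>` starts one manually.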

The two platforms' approaches to application deployment differ markedly. Kubernetes relies on a declarative model, while OpenShift blends declarative and imperative approaches for greater control and versatility. Both can perform rolling updates and rollbacks, but OpenShift provides more built-in support for complex deployment scenarios.

In conclusion, OpenShift's additional features give it an advantage in complex deployment scenarios. Still, the choice between Kubernetes and OpenShift depends on each project's requirements, goals, and existing environment.

Deep Dive into Kubernetes Deployment and Management

Building for Optimal Performance with Kubernetes

The performance of containerized applications depends heavily on a solid foundation built around Kubernetes' design principles. As a powerful container orchestration system, Kubernetes offers a wide range of options, and the task is to navigate them carefully to get the most out of them.

Fine-Tuning Kubernetes Deployment Mechanisms

To get the most from your applications in Kubernetes, deployments need to be defined precisely. They are described declaratively in YAML or JSON.

A basic Deployment manifest, typically written in YAML, specifies a name, a label selector, a replica count, and a Pod template that names the container image to run.

Applying a manifest that runs the Nginx image with replicas: 3 creates three Nginx Pods. The replicas field tells Kubernetes to keep three instances of the application running at all times.
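Such a manifest can also be generated programmatically. The Python sketch below builds a Deployment with three replicas of an Nginx container as a dictionary and prints it as JSON, which kubectl accepts just as it accepts YAML. The image tag and object names are assumptions chosen for illustration; note that the selector's matchLabels must match the Pod template's labels, or the API server rejects the Deployment.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a minimal apps/v1 Deployment running `replicas` copies of `image`."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the Pod template labels below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image,
                         "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


manifest = deployment_manifest("nginx", "nginx:1.14.2", replicas=3, port=80)
print(json.dumps(manifest, indent=2))
```

Piping the output into `kubectl apply -f -` would create the Deployment, after which `kubectl get deployments` should report three ready replicas once the Pods start.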

Accelerating Application Onboarding

Kubernetes ships with tooling that speeds up application onboarding. Chief among these tools is kubectl, the command-line utility for interacting with a cluster.

The following kubectl commands play a key role:

- kubectl get deployments: Lists the deployments in the current namespace.
- kubectl describe deployment <deployment name>: Displays detailed information about a specific deployment.
- kubectl rollout status deployment/<deployment name>: Shows the progress of a rollout in real time.
- kubectl set image deployment/<deployment name> <container name>=<new image>: Updates a container's image in a deployment, which triggers a rollout.

Enhancing Deployment Outcomes

Kubernetes offers deployment strategies tailored to application needs, including autoscaling that reacts in real time to metrics such as CPU load.

To change the scale of a deployment manually, use kubectl scale, for example `kubectl scale deployment/<deployment name> --replicas=5`.

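To enable autoscaling instead, use kubectl autoscale, for example `kubectl autoscale deployment <deployment name> --min=2 --max=10 --cpu-percent=80`, which creates a HorizontalPodAutoscaler for the deployment.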
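The HorizontalPodAutoscaler's core rule, as given in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default). The Python sketch below applies that rule to CPU utilization and clamps the result to the --min/--max bounds; it ignores details the real controller handles, such as Pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas: int, current_cpu_pct: float,
                     target_cpu_pct: float, min_replicas: int,
                     max_replicas: int, tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for a single CPU utilization metric."""
    ratio = current_cpu_pct / target_cpu_pct
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 Pods averaging 160% of their CPU request against an 80% target.
print(desired_replicas(4, 160, 80, min_replicas=2, max_replicas=10))  # 8
```

Doubling the load relative to the target doubles the replica count, while a 5% overshoot falls inside the tolerance and leaves the deployment alone.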

Protecting Deployment Updates and Empowering Rollback

When a Deployment's Pod template is modified, Kubernetes performs a rolling update, gradually replacing old Pods with new ones. If the change causes problems, you can quickly roll back to the previous revision.

Initiate an upgrade with kubectl set image and monitor its status using kubectl rollout status.

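To revert changes, use `kubectl rollout undo deployment/<deployment name>`; add `--to-revision=<revision>` to return to a specific earlier revision.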
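The update, watch, and undo steps above can be chained in a script. The Python sketch below builds the kubectl invocations and, only when dry_run is False, runs them with subprocess, rolling back if any step fails. It assumes that `kubectl rollout status` exits with a non-zero code when the rollout does not complete within --timeout; the Deployment, container, and image names are hypothetical.

```python
import shlex
import subprocess
from typing import List


def update_commands(deployment: str, container: str, image: str) -> List[List[str]]:
    """kubectl invocations that update a container image and wait for the rollout."""
    target = f"deployment/{deployment}"
    return [
        ["kubectl", "set", "image", target, f"{container}={image}"],
        # Assumed to exit non-zero if the rollout does not finish in time.
        ["kubectl", "rollout", "status", target, "--timeout=120s"],
    ]


def rollback_command(deployment: str) -> List[str]:
    return ["kubectl", "rollout", "undo", f"deployment/{deployment}"]


def update_or_rollback(deployment: str, container: str, image: str,
                       dry_run: bool = True) -> bool:
    """Run the update; if any step fails, roll back and return False."""
    for cmd in update_commands(deployment, container, image):
        if dry_run:
            print("would run:", shlex.join(cmd))
            continue
        if subprocess.run(cmd).returncode != 0:
            subprocess.run(rollback_command(deployment), check=True)
            return False
    return True


# Hypothetical Deployment, container, and image names.
update_or_rollback("nginx-deployment", "nginx", "nginx:1.16.1")
```

Keeping dry_run as the default means the script prints its plan unless a caller explicitly opts in to touching a cluster.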

Supervision and Governance

Kubernetes provides built-in ways to measure resource usage and review logs. The kubectl top command reports CPU and memory usage for nodes and Pods (it requires the Metrics Server to be installed in the cluster), and kubectl logs retrieves container logs for detailed inspection.
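When kubectl top output feeds a script, its quantities need converting: CPU is reported in cores or millicores (250m means 0.25 cores) and memory in units such as Mi (mebibytes). The Python sketch below parses the default output of `kubectl top pods` for a single namespace, assuming a header row followed by NAME, CPU(cores), and MEMORY(bytes) columns; other variants, such as `kubectl top nodes` or output with a NAMESPACE column, would need different column handling.

```python
from typing import Dict, Tuple

_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
_DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_cpu(quantity: str) -> float:
    """'250m' -> 0.25 cores; '2' -> 2.0 cores."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity: str) -> int:
    """'64Mi' -> bytes, checking two-letter binary suffixes before decimal ones."""
    for suffix, factor in _BINARY.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    for suffix, factor in _DECIMAL.items():
        if quantity.endswith(suffix):
            return int(quantity[:-1]) * factor
    return int(quantity)


def parse_top(output: str) -> Dict[str, Tuple[float, int]]:
    """Map Pod name -> (CPU cores, memory bytes), skipping the header row."""
    result = {}
    for line in output.strip().splitlines()[1:]:
        name, cpu, mem = line.split()[:3]
        result[name] = (parse_cpu(cpu), parse_memory(mem))
    return result


sample = """NAME    CPU(cores)   MEMORY(bytes)
web-1   250m         64Mi
web-2   1            128Mi"""
print(parse_top(sample))
```

In practice the input could come from `subprocess.run(["kubectl", "top", "pods"], capture_output=True, text=True).stdout`.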

Ultimately, Kubernetes is a pivotal tool for managing, coordinating, and governing containerized workloads in the enterprise. With its rich command-line tooling, scaling options, and fast rollbacks, it has proven its value.

Unpacking OpenShift Deployment and Management

OpenShift, a Red Hat product, has become a leading platform distinguished by its set of integrated components. Its purpose is to containerize applications and to streamline and accelerate software development and delivery. It builds on the Kubernetes architecture and extends it with purpose-built tools for developers and operations teams.

This section examines how OpenShift deploys and manages applications and highlights its distinctive features and functionality.

Process of Deploying Applications on OpenShift: A Detailed Guide

Deploying an application on OpenShift starts with defining its essential objects, such as Pods, Services, and Routes. First, you describe your application in a YAML or JSON file. Once the files are ready, you apply them to the cluster with `oc apply -f <file>`.

A typical example defines an application named your-app that runs as three replicas, each in its own Pod. The application uses the container image `your-app:1.0.0` and listens on port 8080.
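The your-app example can be sketched in Python: build the Deployment as a dictionary, write it to a JSON file, and hand that file to `oc apply -f`. By default the helper only returns the command it would run; it invokes oc only when dry_run is False and oc is on the PATH. The file name is an assumption, and the bare image name your-app:1.0.0 is kept from the text even though a real cluster needs it to resolve to a reachable registry.

```python
import json
import shutil
import subprocess
import tempfile
from pathlib import Path


def your_app_manifest() -> dict:
    """Deployment for your-app: 3 replicas of your-app:1.0.0 listening on 8080."""
    labels = {"app": "your-app"}
    container = {
        "name": "your-app",
        "image": "your-app:1.0.0",
        "ports": [{"containerPort": 8080}],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "your-app"},
        "spec": {
            "replicas": 3,
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels},
                         "spec": {"containers": [container]}},
        },
    }


def apply_with_oc(manifest: dict, directory: Path, dry_run: bool = True) -> list:
    """Write the manifest to a JSON file and, optionally, run `oc apply -f` on it."""
    path = directory / "your-app.json"
    path.write_text(json.dumps(manifest, indent=2))
    cmd = ["oc", "apply", "-f", str(path)]
    # Only touch a real cluster when explicitly asked and oc is installed.
    if not dry_run and shutil.which("oc"):
        subprocess.run(cmd, check=True)
    return cmd


with tempfile.TemporaryDirectory() as tmp:
    print(apply_with_oc(your_app_manifest(), Path(tmp)))
```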

The Many Facets of Simplified Management with OpenShift

OpenShift provides a range of management tools for developers and operations staff. The easy-to-use OpenShift web console and the capable oc command-line tool together let users control the OpenShift environment effectively.

The OpenShift Console presents a visual overview of the cluster, showing information such as running Pods, resource allocation, and any anomalies or alerts. Through its easy-to-navigate interface, users can create, modify, or delete resources.

The oc command-line tool is well suited to scripting and automation. It supports every operation available in the console, plus several more. For instance, `oc get pods` lists the Pods in the current project.
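For automation, structured output is easier to consume than the default table. The Python sketch below assumes the JSON that `oc get pods -o json` prints (a List object with an items array) and extracts the names of Pods whose status.phase is Running; the sample data is invented.

```python
import json
from typing import List


def running_pods(oc_json: str) -> List[str]:
    """Names of Pods whose status.phase is Running, from `oc get pods -o json`."""
    data = json.loads(oc_json)
    return [
        item["metadata"]["name"]
        for item in data.get("items", [])
        if item.get("status", {}).get("phase") == "Running"
    ]


# Invented sample shaped like the List object oc returns.
sample = json.dumps({"apiVersion": "v1", "kind": "List", "items": [
    {"metadata": {"name": "your-app-1"}, "status": {"phase": "Running"}},
    {"metadata": {"name": "your-app-2"}, "status": {"phase": "Pending"}},
]})
print(running_pods(sample))  # ['your-app-1']
```

The same filter can also be pushed to the server with `oc get pods --field-selector=status.phase=Running`.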

Dissecting the Unparalleled Features of OpenShift

OpenShift significantly improves the capabilities of Kubernetes with the inclusion of unique elements, designed to streamline deployment and management procedures. These improvements encompass:

- Source-to-Image (S2I): S2I is a set of tools and workflows that builds container images directly from source code. It injects the application's source into a builder image, which assembles it into a ready-to-run image.

- Routes: OpenShift exposes HTTP and HTTPS services through Routes, which predate the Kubernetes Ingress resource (OpenShift supports Ingress as well). Routes offer additional capabilities such as path-based routing and TLS termination.

- Developer Tools: OpenShift integrates developer tooling, including Jenkins for Continuous Integration/Continuous Delivery (CI/CD) and CodeReady Workspaces, a browser-based development environment built on Eclipse Che, for team collaboration.

- Built-in Security and Compliance: OpenShift includes a range of security features, among them Security Context Constraints (SCCs), which let administrators control what actions a Pod can perform and what resources it can access.

To conclude, OpenShift has reshaped how containerized applications are deployed and managed. Its distinctive features, combined with the robustness of Kubernetes, make it a preferred platform for organizations and individuals adopting modern application development and deployment practices.

Kubernetes Deployment: A Step-by-Step Guide

Deploying an application on Kubernetes requires a series of steps grounded in a solid understanding of the platform's architecture and components. This guide walks through the process, from preparing your environment to monitoring your running application.

Environment Preparation

Before deploying anything, prepare your environment: install kubectl, the Kubernetes command-line tool, and set up a Kubernetes cluster.

- Installing kubectl: kubectl lets you run commands against Kubernetes clusters. To install it on your machine, follow the instructions in the Kubernetes documentation.

- Setting Up a Kubernetes Cluster: A Kubernetes cluster consists of a control plane and one or more worker nodes (on a single-node development cluster, one machine plays both roles). For development, you can run a cluster on your own machine with tools such as Minikube or kind; for production, a managed cloud service such as Google Kubernetes Engine (GKE) or Amazon Elastic Kubernetes Service (EKS) is an option.

Formulating a Deployment

With the environment in place, you can create a Kubernetes Deployment. A Deployment is a Kubernetes object that manages a set of identical Pods. It ensures that a specified number of Pods are running at all times, which makes it well suited to stateless applications.

- Writing the Deployment Manifest: The manifest, usually written in YAML or JSON, describes the desired state of your Deployment. It specifies the replica count, the container image to use, and any environment variables or configuration data the application needs.

- Creating the Deployment: Run `kubectl apply -f <file>`. The command reads the manifest and creates the corresponding Kubernetes objects.

Connecting Your Application

Once the Deployment exists, you need to expose your application to its clients, which you do by creating a Kubernetes Service.

- Writing the Service Manifest: The Service manifest describes the desired state of your Service. It specifies the Service type (for example, ClusterIP, NodePort, or LoadBalancer), the port to expose, and the selector that identifies which Pods receive traffic.

- Creating the Service: Again run `kubectl apply -f <file>`, which reads the manifest and creates the corresponding Kubernetes objects.
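Like the Deployment, the Service can be written in YAML or JSON. This Python sketch builds a Service manifest with the fields described above (type, port, and selector), restricted to the three Service types the text names; the names, labels, and ports are hypothetical. The selector must match the labels on the Deployment's Pod template for traffic to reach those Pods.

```python
import json

# The three Service types named in the text (ExternalName is left out here).
SERVICE_TYPES = {"ClusterIP", "NodePort", "LoadBalancer"}


def service_manifest(name: str, selector: dict, port: int, target_port: int,
                     service_type: str = "ClusterIP") -> dict:
    """Build a minimal v1 Service forwarding `port` to `target_port` on selected Pods."""
    if service_type not in SERVICE_TYPES:
        raise ValueError(f"unsupported Service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": selector,  # must match the Pod template labels
            "ports": [{"protocol": "TCP", "port": port, "targetPort": target_port}],
        },
    }


svc = service_manifest("nginx-service", {"app": "nginx"}, 80, 80, "LoadBalancer")
print(json.dumps(svc, indent=2))
```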

Supervising Your Application

Once your application is running, the next step is to monitor it and resolve any issues that arise. Kubernetes provides several useful tools for this.

- kubectl Commands: Several kubectl commands, such as kubectl get, kubectl describe, and kubectl logs, let you check the status of your Pods, Services, and Deployments and review logs and events.

- Kubernetes Dashboard: The Kubernetes Dashboard, a web-based user interface, gives a graphical overview of your cluster and lets you manage and troubleshoot your applications.

- Monitoring Tools: Tools such as Prometheus and Grafana provide detailed metrics and visualizations of your application's performance.

In summary, deploying an application on Kubernetes involves preparing your environment, creating a Deployment, exposing the application through a Service, and monitoring its performance. This guide offers only a basic overview; Kubernetes provides many more features and options for tailoring deployments to your exact requirements.

OpenShift Deployment: A Comprehensive Overview

Unraveling the Secrets of OpenShift's Structure

Created by Red Hat, OpenShift builds on the container isolation and orchestration of Kubernetes, a platform known for managing large, densely packed container environments. OpenShift excels at speeding up, streamlining, and standardizing the delivery of applications across many environments. This section covers its key components, deployment options, and operational practices.

OpenShift's Architecture & Performance: An Examination

OpenShift's product range, designed for container-based applications, extends the core capabilities of Kubernetes with purpose-built tools that streamline and govern software design, deployment, and the entire application lifecycle.

OpenShift's architecture can be broken down into several key components:

- Master: Hosts OpenShift's management components, such as the API server, the controller manager, and the etcd store that holds the cluster's state.

- Nodes: Nodes host the application containers. Each node runs a kubelet, which handles communication between the master and the node, and a container runtime, which runs the containers.

- Pods: The fundamental, deployable units within OpenShift are the pods, capable of managing several containers sharing storage and network resources.

- Services: A Service provides a stable endpoint for a group of pods, such as the replicas of a web server. Services load-balance traffic across those pods and simplify service discovery.

- Routes: Routes define how external traffic reaches services inside the cluster.

OpenShift Deployment Options

OpenShift is available in several deployment options designed to suit a broad range of technical requirements and preferences. These include:

- OpenShift Online: Managed by Red Hat, it is a cloud service suited to individual developers and small teams who want to build and deploy applications quickly.

- OpenShift Dedicated: This offering provides dedicated clusters in the cloud, managed by Red Hat, and suits large businesses that need substantial resources and managed operations.

- OpenShift Container Platform (OCP): Self-managed OCP can be set up on premises or in the cloud, offering greater operational control and flexibility. It is best suited to businesses with complex needs.

Activating an OpenShift-Enabled Application

Application deployment on OpenShift involves these stages:

- Creating a Project: A project is a Kubernetes namespace with additional annotations. It gives developers an isolated workspace for a particular application.

- Defining the Application: Write configuration files (in YAML or JSON) that describe the application's components, such as pods, services, and routes.

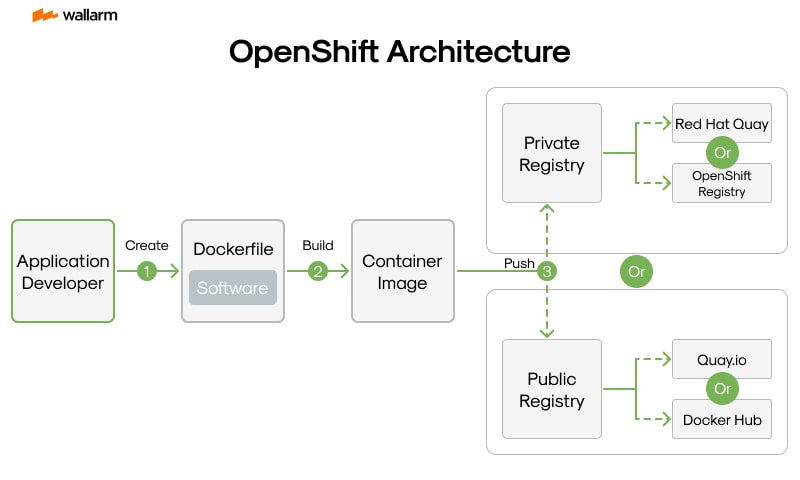

- Building the Application: OpenShift supports several build strategies, including Docker builds, Source-to-Image (S2I), and custom builds. Each produces a container image of the application, which is stored in a registry.

- Deploying the Application: Create a DeploymentConfig object, specify the container image, and define the deployment settings. OpenShift then starts a new deployment, creating a new replication controller and a set of pods that run the application.

- Disclosing the Software: Configure a route connecting a domain name to the services corresponding to the software, thus enabling external data exchanges.
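To make the defining and exposing steps concrete, here is a minimal sketch that builds a Service and a Route as Python dictionaries and prints them as a JSON `List`. The application name `myapp`, the hostname `myapp.example.com`, and port 8080 are hypothetical placeholders. The field names follow the Kubernetes `v1` Service API and the OpenShift `route.openshift.io/v1` Route API.

```python
import json


def service_manifest(app: str, port: int) -> dict:
    """A ClusterIP Service that selects pods labelled app=<app>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app, "labels": {"app": app}},
        "spec": {
            "selector": {"app": app},
            "ports": [{"name": "http", "port": port, "targetPort": port}],
        },
    }


def route_manifest(app: str, host: str) -> dict:
    """An OpenShift Route mapping an external hostname to the Service."""
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": app, "labels": {"app": app}},
        "spec": {
            "host": host,
            "to": {"kind": "Service", "name": app},
            # Named port; matches the Service port named "http".
            "port": {"targetPort": "http"},
        },
    }


if __name__ == "__main__":
    # Hypothetical application name, hostname, and port.
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            service_manifest("myapp", 8080),
            route_manifest("myapp", "myapp.example.com"),
        ],
    }
    print(json.dumps(manifests, indent=2))
```

You can pipe the printed list to `oc apply -f -` to create both objects in the current project.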

Steering OpenShift Operations

Managing an OpenShift cluster requires monitoring cluster performance, scaling applications, handling upgrades and patches, and troubleshooting. For effective administration, OpenShift combines a range of tools and features:

- OpenShift Console: This feature-rich web interface gives a comprehensive view of the cluster and its resources. It helps with creating projects, deploying applications, and managing resources.

- OpenShift CLI (oc): A capable command-line tool, well suited to scripting and task automation.

- Monitoring & Logging: OpenShift integrates monitoring and logging tools such as Prometheus and Elasticsearch for detailed insight into cluster performance.

- Autoscaling: OpenShift supports horizontal pod autoscaling, which adjusts the number of pod replicas based on CPU usage or other custom metrics.
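Horizontal pod autoscaling follows the rule documented for the Kubernetes Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule with the default 10% tolerance and clamps the result to a min/max range. It is a simplified model that ignores pod readiness, missing metrics, and stabilization windows. The function name `desired_replicas` is our own.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for one metric (e.g. average CPU utilization)."""
    ratio = current_metric / target_metric
    # Within the tolerance band, the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6
    print(desired_replicas(4, 90, 60))
    # 4 pods at 63% against 60% is within tolerance, so the count stays at 4
    print(desired_replicas(4, 63, 60))
```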

At its core, OpenShift is a holistic platform for orchestrating and managing the lifecycle of containerized applications. Its robust architecture and range of deployment options let it serve a wide variety of requirements and use cases, and its comprehensive administrative toolkit supports efficient cluster operations.

Management in Kubernetes: Essential Tools and Techniques

Kubernetes can seem as baffling as a puzzle because of its elaborate structure. A detailed, objective analysis, however, reveals how its parts fit together. The key to mastering Kubernetes is a thorough understanding of its core tools and main functional mechanisms.

Essential Tools for Kubernetes Mastery

There are three main categories of tools for becoming adept at Kubernetes: Command-Line Tools, Graphical User Interfaces, and Third-Party Tools.

- Command-Line Tools: Tools such as kubectl form the backbone of Kubernetes administration and make it easy to navigate a Kubernetes environment. They make operators more effective by automating repetitive tasks, tracking the health of Kubernetes clusters, and controlling Kubernetes objects through typed commands.

- Graphical User Interfaces: Visual tools such as the Kubernetes Dashboard depict the cluster's architecture. This web-based interface, provided by the Kubernetes project, simplifies managing clusters and deploying applications.

- Third-Party Tools: Built by the wider community, these tools extend Kubernetes' capabilities. For instance, Helm is a package manager for Kubernetes that makes it easy to install tools such as Prometheus, which collects metrics for alerting and health monitoring.
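kubectl's machine-readable output is what makes it convenient for automation. The function below is a sketch that summarizes the output of `kubectl get pods -o json` into per-phase pod counts and a total container restart count. That output is a `List` whose `items` each carry `status.phase` and, once containers have started, `status.containerStatuses[].restartCount`. The `SAMPLE` document is a trimmed, hypothetical example.

```python
import json
from collections import Counter


def summarize_pods(kubectl_json: str) -> dict:
    """Summarize `kubectl get pods -o json` output by pod phase and restarts."""
    pod_list = json.loads(kubectl_json)
    phases = Counter()
    restarts = 0
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        phases[status.get("phase", "Unknown")] += 1
        # containerStatuses may be absent for pods that have not started yet.
        for cs in status.get("containerStatuses", []):
            restarts += cs.get("restartCount", 0)
    return {"phases": dict(phases), "restarts": restarts}


# Trimmed, hypothetical sample of `kubectl get pods -o json` output.
SAMPLE = """
{"apiVersion": "v1", "kind": "List", "items": [
  {"metadata": {"name": "web-1"},
   "status": {"phase": "Running", "containerStatuses": [{"name": "web", "restartCount": 0}]}},
  {"metadata": {"name": "web-2"},
   "status": {"phase": "Running", "containerStatuses": [{"name": "web", "restartCount": 3}]}},
  {"metadata": {"name": "job-1"}, "status": {"phase": "Pending"}}
]}
"""

if __name__ == "__main__":
    print(summarize_pods(SAMPLE))
    # {'phases': {'Running': 2, 'Pending': 1}, 'restarts': 3}
```

Against a live cluster, you could pass in the real output instead, for example `subprocess.run(["kubectl", "get", "pods", "-o", "json"], capture_output=True, text=True, check=True).stdout`.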

Strategies for Improving Kubernetes Management

Getting the most out of Kubernetes calls for deliberate, well-chosen practices. These include:

- Cluster Design: The long-term health of a Kubernetes cluster depends on careful upfront design. This includes planning a network layout, documenting it properly, and aligning it with Kubernetes' operational requirements.

- Monitoring and Log Aggregation: Observing your Kubernetes cluster to track its changing state and measure how well it performs is vital. Essential tools here include Prometheus for monitoring, Grafana for visualization, and Fluentd and Elasticsearch for collecting and storing logs.

- Performance Tuning: Kubernetes offers several ways to improve application performance, from manual scaling and horizontal pod scaling to adjusting core cluster settings.

- Rolling Updates and Rollbacks: Kubernetes rolls out application changes incrementally, which greatly reduces downtime. If problems appear, it can quickly roll back to a previous configuration.

- Security Hardening: Kubernetes provides role-based access control (RBAC), Network Policies, and pod security controls to strengthen cluster security.

- Resource Allocation: Kubernetes lets you define how much CPU and memory each component of your application requests and may use. This ensures a fair share of resources among all applications in the cluster.
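Several of these practices, especially rolling updates and per-container resource allocation, are expressed directly in a Deployment manifest. The sketch below generates one as JSON. The name `web`, the image `registry.example.com/web:1.2.0`, and the CPU and memory figures are hypothetical. Setting `maxSurge: 1` with `maxUnavailable: 0` tells Kubernetes to add a new pod before removing an old one. `requests` and `limits` tell the scheduler how much CPU and memory to reserve and cap.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 3) -> dict:
    """apps/v1 Deployment with a conservative rolling-update strategy."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Never drop below the desired count; add at most one extra pod.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "resources": {
                            # Reserved by the scheduler when placing the pod.
                            "requests": {"cpu": "250m", "memory": "256Mi"},
                            # Hard caps enforced at runtime.
                            "limits": {"cpu": "500m", "memory": "512Mi"},
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment_manifest("web", "registry.example.com/web:1.2.0"), indent=2))
```

If a rollout misbehaves, `kubectl rollout undo deployment/web` returns the Deployment to its previous revision.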

In a nutshell, becoming proficient in Kubernetes means strategically using a diverse set of essential tools and specific practices. Applying them consistently results in a sturdy, secure, and highly flexible Kubernetes cluster.

Effective Management in OpenShift: Tried and Tested Strategies

Red Hat's OpenShift platform can greatly influence how successfully you adopt container technology. With Kubernetes at its foundation, OpenShift brings powerful tools tailored to support both development and operations work.

The Main OpenShift Offerings

OpenShift is available in four main editions: OpenShift Container Platform, OpenShift Online, OpenShift Dedicated, and OpenShift Origin (now known as OKD). Each targets a different audience and degree of control.

- OpenShift Container Platform: This platform-as-a-service (PaaS) solution runs on infrastructure you control, including demanding on-premises environments. It accelerates the deployment process and improves developer workflows.

- OpenShift Online: This hosted PaaS lets developers build, deploy, and maintain applications in the public cloud.

- OpenShift Dedicated: Managed exclusively by Red Hat, it provides dedicated clusters that give users substantial resources for developing, running, and managing applications.

- OpenShift Origin (OKD): The open-source community distribution behind OpenShift, it is continuously updated and offers a complete development environment.

Core Principles of OpenShift Management

Principle 1: Integrated Automation

A crucial part of OpenShift's operational strength lies in its comprehensive automation. For example, the Horizontal Pod Autoscaler continually adjusts the number of pods according to CPU usage relative to the thresholds defined for each application.
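In practice, these thresholds are declared in a HorizontalPodAutoscaler object. The sketch below builds an `autoscaling/v2` HPA that targets a Deployment. The target name `web`, the 2 to 10 replica range, and the 70% CPU target are hypothetical values. CPU utilization is measured against the containers' CPU requests, so the target workload must set them.

```python
import json


def hpa_manifest(target: str, min_replicas: int, max_replicas: int,
                 cpu_utilization: int) -> dict:
    """autoscaling/v2 HorizontalPodAutoscaler scaling a Deployment on CPU."""
    if not 1 <= min_replicas <= max_replicas:
        raise ValueError("need 1 <= min_replicas <= max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": target},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": target},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    # Percentage of the pods' CPU requests, averaged across pods.
                    "target": {"type": "Utilization", "averageUtilization": cpu_utilization},
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(hpa_manifest("web", 2, 10, 70), indent=2))
```

The `oc autoscale` command can also create a comparable object directly from the command line.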

Principle 2: Extensive Developmental Tools

OpenShift comes equipped with an abundance of developer tools, such as Jenkins for continuous integration and continuous delivery (CI/CD). In addition, application monitoring tools feed into lifecycle management decisions.

Principle 3: Streamlined User Interface

OpenShift provides a user-friendly platform that speeds up application development. It also automates tasks such as building and deploying applications, which improves operational efficiency and minimizes manual labour.

Principle 4: Robust Security Provisions

OpenShift implements advanced security features. These include Security-Enhanced Linux (SELinux), which enforces mandatory access control policies on processes, files, and network ports for tight oversight.

Recommended Practices for OpenShift Management

- Configuring OpenShift: A thorough review of configuration improves OpenShift's effectiveness. Key factors include cluster topology, core network settings, and storage configuration.

- Application Roll-out: Once configured, OpenShift makes it straightforward to deploy applications written in diverse languages and runtimes, such as Java, Node.js, Python, and Ruby.

- Performance Monitoring & Response: OpenShift has advanced tools for measuring performance and responding to problems. Its indicators help detect and resolve potential issues quickly.

- Application Modification & Enhancement: OpenShift handles both manual and automated changes to applications, adapting as needs evolve.

- Implementing Security Measures: OpenShift has security elements like role-based access control, Secrets, and Service Accounts. Managing these security aspects judiciously is essential to secure both the platform and its hosted apps.
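The three mechanisms in the last item work together. A Secret holds the sensitive value, a Service Account gives the application an identity, and RBAC grants that identity access. The sketch below generates all four objects: a Secret, a ServiceAccount, a Role, and a RoleBinding. The namespace `shop`, the names `db-credentials` and `web`, and the password value are hypothetical. Values under a Secret's `data` field must be base64-encoded, and that is encoding, not encryption.

```python
import base64
import json


def secret_access_manifests(ns: str, secret: str, sa: str, values: dict) -> list:
    """Secret + ServiceAccount + least-privilege Role/RoleBinding to read it."""
    encoded = {k: base64.b64encode(v.encode()).decode() for k, v in values.items()}
    role_name = f"read-{secret}"
    return [
        {"apiVersion": "v1", "kind": "Secret",
         "metadata": {"name": secret, "namespace": ns},
         "type": "Opaque", "data": encoded},
        {"apiVersion": "v1", "kind": "ServiceAccount",
         "metadata": {"name": sa, "namespace": ns}},
        {"apiVersion": "rbac.authorization.k8s.io/v1", "kind": "Role",
         "metadata": {"name": role_name, "namespace": ns},
         # Only "get" on this one Secret, nothing else in the namespace.
         "rules": [{"apiGroups": [""], "resources": ["secrets"],
                    "resourceNames": [secret], "verbs": ["get"]}]},
        {"apiVersion": "rbac.authorization.k8s.io/v1", "kind": "RoleBinding",
         "metadata": {"name": role_name, "namespace": ns},
         "subjects": [{"kind": "ServiceAccount", "name": sa, "namespace": ns}],
         "roleRef": {"apiGroup": "rbac.authorization.k8s.io", "kind": "Role",
                     "name": role_name}},
    ]


if __name__ == "__main__":
    objs = secret_access_manifests("shop", "db-credentials", "web",
                                   {"password": "change-me"})
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": objs}, indent=2))
```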

To conclude, successful OpenShift operations rely on seamless automation, a rich developer toolset, a user-friendly environment, and strong security. This balanced combination of features underpins OpenShift's efficiency and its standing as a reliable platform for developing and delivering applications at scale.

Visualizing Kubernetes and OpenShift: User Experience Comparison

Controlling container systems becomes far less of a puzzle with intuitive, effective platforms. Kubernetes and OpenShift dominate this space, and both are known for capable interfaces backed by a wide range of features. This evaluation examines how user-friendly and simple each platform is, covering visual representation, user focus, and ease of operation.

Engaging with Kubernetes

Kubernetes, a cornerstone of the open-source community, provides a powerful command-line interface (CLI) called kubectl. This tool simplifies application deployment and lifecycle management. It also lets users inspect, modify, and recreate resources and examine logs and running processes.

Kubernetes also offers a web-based user interface, the Kubernetes Dashboard, which lets users monitor the operational state of a Kubernetes cluster. The dashboard is comprehensive, but it requires users to know Kubernetes terminology and concepts. Its breadth of control and versatility also make it a steep starting point for newcomers.

Engaging with OpenShift

OpenShift's interface, by contrast, is known for its user-centric approach. It provides both a command-line tool and a web console that visualizes all current activities and changes within the cluster.

Kubernetes emphasizes command-line interaction. OpenShift instead eases the learning curve with graphical representations of Kubernetes components and a visual interface for straightforward creation, modification, and deletion of resources.

The console also includes a wide catalog of ready-to-use applications and services. To boost developer convenience and productivity, OpenShift builds many developer tools directly into its console. For some developers, this difference may tip the scales away from Kubernetes.

Comparative Analysis

User experience with Kubernetes and OpenShift may ultimately come down to individual preference. To highlight the differences, let's consult the table below:

Both Kubernetes and OpenShift offer a graphical user interface (GUI) that clearly shows the cluster's health status. However, OpenShift has the edge with a more comprehensive, user-centric UI that simplifies project and resource management.

Given today's demands, OpenShift holds an advantage with its trouble-free interface and built-in developer tools, which offset Kubernetes' steep learning curve.

In summary, both Kubernetes and OpenShift have earned their stripes as container orchestration platforms. OpenShift's intuitive interface makes it a strong fit for those new to container orchestration. Kubernetes remains an excellent choice for those seeking in-depth control and flexibility.

Exploring the Role of Red Hat in Transforming Kubernetes into OpenShift

Red Hat, a leader in open-source innovation, built OpenShift on top of Kubernetes, turning the project into an enterprise platform. This evolution, driven by Red Hat's commitment to powerful and reliable business solutions, improves how containerized applications are run.

Red Hat: Nurturing the Growth of Kubernetes

Red Hat has been one of the earliest and most significant contributors to the Kubernetes project. Its contributions span every facet of the project, including networking, storage, and application development. Red Hat's involvement helped improve Kubernetes' reliability, scalability, and security, and Kubernetes has since become a popular platform for managing containerized applications.

OpenShift - The Fruition of Red Hat's Vision

Recognizing the potential of Kubernetes, Red Hat set out to extend it for enterprise use. This led Red Hat to rebuild OpenShift on Kubernetes. The result is a feature-rich platform that harnesses Kubernetes and adds tools tailored to operating containerized workloads.

OpenShift extends Kubernetes into an all-inclusive platform that integrates a container runtime, a networking framework, an observability suite, and a refined developer workflow. This unified approach improves the management and oversight of containerized applications and helps organizations get the most out of their investment in containers.

Key Features Infused into Kubernetes by Red Hat via OpenShift

Red Hat added numerous beneficial features to Kubernetes through OpenShift, specifically:

- Unified Developer Workflow: OpenShift provides a smooth workflow that lets developers iterate on, deploy, and monitor containerized applications faster. Its tools include Source-to-Image (S2I), which builds and deploys containers directly from source code.

- Robust Security: OpenShift excels in security enhancements. Chief among them is the enforcement of Security-Enhanced Linux (SELinux), a kernel-level access control mechanism that defends against unauthorized access and safeguards system integrity.

- Advanced Networking: OpenShift uses software-defined networking (SDN) to provide a unified network fabric that spans the entire cluster, supporting both security and scalability.

- Integrated Monitoring and Logging: OpenShift includes built-in observability and logging. These provide deep insight into the system's health and performance, help teams identify and fix issues promptly, and keep applications reliable.

- Automated Updates: OpenShift includes an automated update mechanism that keeps the platform current with the latest features and security fixes while minimizing manual intervention.
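The S2I workflow from the first item can be illustrated with a sketch of an OpenShift `BuildConfig` (API group `build.openshift.io/v1`) that uses the Source strategy. OpenShift clones the Git repository, runs the source through a builder image, and pushes the result to an image stream tag. The repository URL, the builder image tag `python:3.11-ubi9`, and the output name are hypothetical. The sketch also assumes an ImageStream named after the app already exists. Check which builder image streams are available in your cluster's `openshift` namespace.

```python
import json


def s2i_build_config(app: str, git_uri: str, builder_tag: str) -> dict:
    """build.openshift.io/v1 BuildConfig using the Source-to-Image strategy."""
    return {
        "apiVersion": "build.openshift.io/v1",
        "kind": "BuildConfig",
        "metadata": {"name": app},
        "spec": {
            # Where the application source comes from.
            "source": {"type": "Git", "git": {"uri": git_uri}},
            "strategy": {
                "type": "Source",
                "sourceStrategy": {
                    # Builder image that knows how to assemble this language's source.
                    "from": {"kind": "ImageStreamTag", "name": builder_tag,
                             "namespace": "openshift"},
                },
            },
            # Where the resulting runnable image is pushed.
            "output": {"to": {"kind": "ImageStreamTag", "name": f"{app}:latest"}},
            # Start a build whenever this BuildConfig is created or changed.
            "triggers": [{"type": "ConfigChange"}],
        },
    }


if __name__ == "__main__":
    bc = s2i_build_config("myapp", "https://github.com/example/myapp.git", "python:3.11-ubi9")
    print(json.dumps(bc, indent=2))
```

The `oc new-app builder~repository-url` form generates a similar BuildConfig, along with deployment objects, in one step.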

Resolute Commitment of Red Hat Towards Kubernetes and OpenShift

Red Hat continues to contribute to Kubernetes while leading OpenShift's development. Its firm commitment to open source helps keep both Kubernetes and OpenShift at the forefront of container technology, giving businesses sturdy, scalable solutions for managing containerized applications.

In essence, by building OpenShift on Kubernetes, Red Hat significantly extended Kubernetes' capabilities and tailored it for enterprise-grade operations. Through OpenShift, Red Hat offers a unified, integrated platform that simplifies the management of containerized applications and has won favor with many enterprises.

Scalability and Performance: Kubernetes vs OpenShift

In-depth Analysis: Scrutinizing Kubernetes and OpenShift

Kubernetes and OpenShift are frequently in the spotlight in container management and orchestration. This analysis examines the core functions of these two platforms and highlights their distinctive attributes and capabilities.

Kubernetes and OpenShift's Approach to Scalability

In distributed systems, an orchestration platform's scalability is its ability to keep pace with growing organizational needs by efficiently managing an ever-larger number of containers.

Comprehensive Study of Kubernetes' Scalability

Kubernetes stands out for its scalability and is designed to manage large clusters of up to 5,000 nodes. Much of the credit goes to its architecture, in which a control plane directs the work of many worker nodes. Each worker node can run numerous pods, the smallest deployable units in Kubernetes.

Moreover, Kubernetes supports automatic scaling through the Horizontal Pod Autoscaler, which adjusts the number of pods based on CPU usage. This helps the system adapt to rising workloads while minimizing manual intervention.
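The 5,000-node figure comes from the Kubernetes documentation on large clusters. That documentation also lists companion thresholds: no more than 110 pods per node, 150,000 pods in total, and 300,000 containers in total. The sketch below checks a planned cluster against those published thresholds. The planned numbers in the example are hypothetical. The documentation presents these figures as the configurations Kubernetes is designed to support, not as limits the API enforces.

```python
# Thresholds from the Kubernetes "Considerations for large clusters" guidance.
LIMITS = {
    "nodes": 5_000,
    "pods_per_node": 110,
    "total_pods": 150_000,
    "total_containers": 300_000,
}


def check_cluster_plan(nodes: int, pods_per_node: int, containers_per_pod: int) -> list:
    """Return the documented thresholds that a planned cluster would exceed."""
    planned = {
        "nodes": nodes,
        "pods_per_node": pods_per_node,
        "total_pods": nodes * pods_per_node,
        "total_containers": nodes * pods_per_node * containers_per_pod,
    }
    return [key for key, value in planned.items() if value > LIMITS[key]]


if __name__ == "__main__":
    # 2,000 nodes x 60 pods = 120,000 pods; x 2 containers = 240,000 containers
    print(check_cluster_plan(2_000, 60, 2))   # []
    # 4,000 nodes x 50 pods = 200,000 pods, above the total-pod guideline
    print(check_cluster_plan(4_000, 50, 1))   # ['total_pods']
```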

In-depth View of OpenShift's Scalability

OpenShift is built on Kubernetes' architecture, inherits its scalability features, and goes a step further. It combines vertical and horizontal pod autoscaling, adjusting not only the number of pods but also the resources allocated to them.

Another notable feature that OpenShift introduces is Projects. Projects make it easier for multiple users to collaborate and considerably improve application management across different teams or departments within a company.

Performance Comparison: Kubernetes vs OpenShift

In container orchestration, performance reflects how efficiently an orchestrator manages and runs containers. Good performance means efficient resource use, fast rollouts, and quick responses.

Unraveling Kubernetes’ Performance Details

Kubernetes uses a declarative model in which users specify the desired state of an application. The platform then continuously works to make the actual state match it, which helps optimize resource usage and speed up deployments.
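The declarative model can be illustrated with a toy reconciliation function. You state how many replicas each workload should have. A controller repeatedly compares that desired state with what is actually running and emits corrective actions. This is a simplified, hypothetical model of the controller pattern, not Kubernetes' actual controller code. The workload names are placeholders.

```python
def reconcile(desired: dict, actual: dict) -> list:
    """Compare desired vs. actual replica counts and return corrective actions.

    Both arguments map workload name -> replica count.
    """
    actions = []
    for name, want in sorted(desired.items()):
        have = actual.get(name, 0)
        if have < want:
            actions.append(("create", name, want - have))
        elif have > want:
            actions.append(("delete", name, have - want))
    # Workloads that are running but no longer declared are removed entirely.
    for name in sorted(set(actual) - set(desired)):
        actions.append(("delete", name, actual[name]))
    return actions


def apply_actions(actual: dict, actions: list) -> dict:
    """Apply actions to a copy of the actual state (stands in for real pod changes)."""
    state = dict(actual)
    for verb, name, count in actions:
        state[name] = state.get(name, 0) + (count if verb == "create" else -count)
        if state[name] == 0:
            del state[name]
    return state


if __name__ == "__main__":
    desired = {"web": 3, "worker": 2}
    actual = {"web": 1, "legacy": 1}
    actions = reconcile(desired, actual)
    print(actions)  # [('create', 'web', 2), ('create', 'worker', 2), ('delete', 'legacy', 1)]
    actual = apply_actions(actual, actions)
    print(reconcile(desired, actual))  # [] : actual state now matches desired state
```

Running `reconcile` again after the actions are applied yields no further work. This repeated comparison is what lets a declarative system converge to the desired state and self-correct.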

In addition, Kubernetes supports rolling updates, which allow applications to be updated without downtime when configured appropriately. This capability is critical for maintaining a reliable user experience.
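Stepping through a rolling update shows why it avoids downtime. The sketch below simulates how a Deployment-style rolling update replaces old pods with new ones under the `maxSurge` and `maxUnavailable` settings. It assumes every new pod becomes ready immediately, whereas real rollouts also wait on readiness probes. The function name is hypothetical.

```python
def rolling_update_steps(replicas: int, max_surge: int, max_unavailable: int) -> list:
    """Simulate (old_pods, new_pods) after each step of a rolling update.

    Assumes new pods become ready instantly, so every running pod counts as available.
    """
    if max_surge == 0 and max_unavailable == 0:
        # Kubernetes rejects this combination too: the rollout could never progress.
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: total pods may not exceed replicas + max_surge.
        new += min(replicas + max_surge - (old + new), replicas - new)
        # Scale down: keep at least replicas - max_unavailable pods available.
        old -= min(old, max(0, (old + new) - (replicas - max_unavailable)))
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    for old, new in rolling_update_steps(3, max_surge=1, max_unavailable=0):
        print(f"old={old} new={new} available={old + new}")
```

With three replicas, `max_surge=1`, and `max_unavailable=0`, three pods remain available at every step while old pods are swapped out one at a time.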

Unraveling OpenShift’s Performance Metrics

OpenShift builds on Kubernetes' performance features. It uses the same declarative model for deployments and supports rolling updates. Beyond that, it integrates service mesh, serverless, and CI/CD pipeline capabilities to help improve application performance.

The bundled toolsets and interfaces in OpenShift simplify the application management process, saving considerable time and reducing effort.

Comparative Evaluation: Kubernetes vs OpenShift

In terms of scalability and performance, both Kubernetes and OpenShift are robust. Kubernetes is a strong fit for extensive cluster management. OpenShift is often the first choice for users who want an advanced toolkit with additional features for scalability and performance. The final choice depends heavily on the organization's specific requirements and deployment scale.

Security in Kubernetes and OpenShift: Ensuring Your Infrastructure is Secure

Robust security is paramount in container orchestration, and Kubernetes and OpenShift are no exception. Both come equipped with strong security features, but they take somewhat different approaches to protecting your infrastructure. This section analyzes the security aspects of Kubernetes and OpenShift, detailing their distinctive features, strengths, and possible shortcomings.

Unveiling Kubernetes Security

As an open-source platform, Kubernetes offers foundational security features that can be extended with additional configuration and standalone tools. Four key mechanisms underpin Kubernetes security:

- Authentication: Kubernetes verifies users with mechanisms such as client certificates and bearer tokens. It can also integrate with external identity systems such as LDAP, SAML, or OAuth providers, typically through OpenID Connect, webhook token authentication, or an authenticating proxy.

- Authorization: Kubernetes uses Role-Based Access Control (RBAC) to grant users permissions over resources and operations.

- Admission Control: Kubernetes uses admission controllers to intercept requests to the API server after authentication and authorization but before objects are persisted. Each request is allowed or rejected based on configured rules.

- Pod Security: Security settings for individual pods and containers can be fine-tuned, restricting what they can do and what they can access.
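Admission control can be extended with validating webhooks. The API server sends an `AdmissionReview` (API version `admission.k8s.io/v1`) that describes the request, and the webhook answers with `allowed: true` or `false`. The function below sketches only the decision logic such a webhook might implement. Under this hypothetical example policy, it rejects pods whose containers run privileged or do not set `runAsNonRoot`. A real deployment would also need an HTTPS server and a webhook registration, which are omitted here.

```python
def review_pod(admission_review: dict) -> dict:
    """Decide an admission.k8s.io/v1 AdmissionReview for a Pod (toy policy)."""
    request = admission_review["request"]
    pod_spec = request["object"].get("spec", {})
    pod_non_root = pod_spec.get("securityContext", {}).get("runAsNonRoot", False)

    problems = []
    for c in pod_spec.get("containers", []):
        sc = c.get("securityContext", {})
        if sc.get("privileged", False):
            problems.append(f"container {c['name']} is privileged")
        # A container-level setting overrides the pod-level one.
        if not sc.get("runAsNonRoot", pod_non_root):
            problems.append(f"container {c['name']} may run as root")

    response = {"uid": request["uid"], "allowed": not problems}
    if problems:
        response["status"] = {"code": 403, "message": "; ".join(problems)}
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
            "response": response}


if __name__ == "__main__":
    review = {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
              "request": {"uid": "1234", "object": {"spec": {
                  "securityContext": {"runAsNonRoot": True},
                  "containers": [{"name": "app", "image": "example/app:1.0"}]}}}}
    print(review_pod(review)["response"])  # {'uid': '1234', 'allowed': True}
```

The response echoes the request's `uid`, which is how the API server matches a decision to the request it sent.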

The challenge with Kubernetes is that much of this requires meticulous manual configuration and expertise, which can be difficult for teams not well versed in Kubernetes.

Advancing to OpenShift Security

OpenShift, by contrast, strengthens Kubernetes security. Because it is built on Kubernetes, it inherits Kubernetes' security model and adds further enhancements:

- Security Context Constraints (SCCs): OpenShift uses SCCs, which provide a more detailed framework than Kubernetes' Pod Security Policies (removed in Kubernetes 1.25). SCCs allow fine-grained control over what pods can do and which resources they may access.

- Built-in OAuth Server: OpenShift includes its own OAuth server, which simplifies authentication against a variety of identity providers.

- Efficient TLS and Certificate Management: OpenShift streamlines the management of TLS certificates, making it easier to secure communication between services.

- Image Signing and Policy: OpenShift supports signing container images and enforcing signature requirements, ensuring the authenticity of container images.

- Network Policy Administration: Like Kubernetes, OpenShift supports network policies that control communication between pods. OpenShift, however, provides a more user-friendly experience for managing these policies.
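Network policies are ordinary Kubernetes objects (`networking.k8s.io/v1`), so the same manifest works on both platforms. The sketch below builds a policy that lets only pods labelled `app=frontend` reach pods labelled `app=backend` on TCP port 8080. The labels, namespace, and port are hypothetical. Because the policy selects the backend pods for ingress, all other inbound traffic to them is denied, provided the cluster's network plugin enforces NetworkPolicy.

```python
import json


def allow_from(namespace: str, target_app: str, source_app: str, port: int) -> dict:
    """NetworkPolicy: only pods labelled app=<source_app> may reach app=<target_app>."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": {"app": target_app}},
            # Declaring Ingress means all inbound traffic not listed below is denied.
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(allow_from("shop", "backend", "frontend", 8080), indent=2))
```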

Kubernetes and OpenShift: Security Assessment

To sum up, both OpenShift and Kubernetes offer strong security features. OpenShift, however, excels at automating many security processes and offers comprehensive control over security configuration. Your choice between them will depend on your specific requirements, resources, and expertise.

Decoding Integration Capabilities: Kubernetes and OpenShift

When implementing container orchestration, the ability to work with other systems is a key factor to consider. Kubernetes and OpenShift both integrate smoothly with a host of other tools, but the nature and benefits of these integrations differ between the two. This section examines the integration strengths of Kubernetes and OpenShift side by side to help you make a well-informed decision.

Kubernetes: A Look into its Integration Capacity

Kubernetes stands out for its open-source nature and its large ecosystem of extensions and auxiliary tools. These add-ons span a broad range of areas, including networking, storage, security, and monitoring.

- Networking: Kubernetes supports a range of network plugins from well-known projects such as Calico, Flannel, and Weave. These plugins provide the cluster's pod network, and some, such as Calico, also enforce network policies. Load balancing and service discovery are handled by Kubernetes Services.

- Storage: Kubernetes works with a wide variety of storage systems. These include cloud block storage such as Amazon Web Services EBS, Azure Disk, and Google Cloud Platform Persistent Disk, as well as network storage systems such as NFS and iSCSI.

- Security: Kubernetes integrates easily with security tools such as Aqua Security, Twistlock, and Sysdig, which provide container scanning, vulnerability management, and runtime protection.

- Monitoring and Logging: For monitoring, Kubernetes is commonly paired with Prometheus and Grafana. For logging, Fluentd and Logstash are common choices.

The YAML script above is a ready-made recipe for deploying Prometheus, the popular monitoring tool, on Kubernetes.
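Whatever the storage backend, applications usually request storage the same way: through a PersistentVolumeClaim that names a StorageClass, which a provisioner such as a CSI driver then fulfils. The sketch below builds such a claim. The class name `fast-ssd`, the claim name, and the 10Gi size are hypothetical. The StorageClasses available depend on the cluster, for example one backed by EBS on AWS.

```python
import json


def pvc_manifest(name: str, storage_class: str, size: str,
                 access_mode: str = "ReadWriteOnce") -> dict:
    """PersistentVolumeClaim requesting storage from a named StorageClass."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce suits block storage such as EBS or Azure Disk;
            # shared filesystems like NFS can also offer ReadWriteMany.
            "accessModes": [access_mode],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(pvc_manifest("prometheus-data", "fast-ssd", "10Gi"), indent=2))
```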

OpenShift: An Exploration of its Integration Potential

Developed and maintained by Red Hat, OpenShift comes with a host of built-in integrations suited to enterprise deployments. It also supports custom integrations through Operators, a method of packaging, deploying, and managing a Kubernetes application.

- Networking: OpenShift ships its own software-defined networking solution, OpenShift SDN. It also integrates with SDN solutions from leading third-party providers such as Nuage, Cisco, and Big Switch.

- Storage: OpenShift integrates smoothly with Red Hat's storage solutions, notably GlusterFS and Ceph. It also supports any other storage system that provides a Container Storage Interface (CSI) driver.

- Security: OpenShift builds in advanced security mechanisms such as security context constraints (SCCs). It can also integrate with Red Hat's security products, such as Red Hat Advanced Cluster Security for Kubernetes.

- Monitoring and Logging: OpenShift ships with built-in monitoring tools, most notably Prometheus. For logging, it relies on the Elasticsearch, Fluentd, and Kibana (EFK) stack.

The YAML script above demonstrates a subscription to Red Hat Advanced Cluster Security for Kubernetes in OpenShift.

The Battle of Integration Capabilities: Kubernetes vs OpenShift

In conclusion, both Kubernetes and OpenShift offer admirable integration capabilities. Kubernetes takes a flexible approach and embraces a wide selection of plugins and toolkits. OpenShift stands out with its enterprise-ready integrations. Your choice will therefore depend on your particular integration needs and how much customization your setup requires.

Exploring Accessibility and Compatibility: Kubernetes vs OpenShift

In the realm of orchestrating container systems, factors such as straightforward access and software compatibility can greatly sway the decision between Kubernetes and OpenShift. Each platform brings to the table individual characteristics and functions, addressing various necessities and expectations. Let's closely examine the facets of accessibility and compatibility provided by Kubernetes and OpenShift to assist your judgement.

Accessibility: Comparing Kubernetes and OpenShift

Kubernetes, with its open-source roots, is highly accessible. It can be installed and run on almost any infrastructure, from public clouds such as AWS, Google Cloud, and Azure to private clouds and on-premises servers. This versatility makes Kubernetes attractive to organizations with wide-ranging infrastructure requirements.

OpenShift, developed by Red Hat, instead offers a more polished and intuitive experience. It can be installed on multiple infrastructures and bundles a complete development environment: a web-based console, a command-line interface, and integrated developer tools. This user-friendliness smooths the way for teams with little container orchestration experience.

Compatibility: Kubernetes and OpenShift Compared

In terms of compatibility, Kubernetes shines with its broad ecosystem. It supports a variety of container runtimes, including Docker and containerd, and works with many networking plugins, storage systems, and service meshes. This adaptability lets Kubernetes meet a wide range of functional requirements.

Built on Kubernetes, OpenShift offers a more curated experience. It supports fewer container runtimes, standardizing primarily on CRI-O, and a relatively narrow range of networking plugins and storage systems. In return, OpenShift carefully manages its compatibility matrix to ensure stability and reliability. This makes it a sound choice for organizations that value these qualities highly.

In conclusion, Kubernetes and OpenShift each have their own strengths and drawbacks with respect to accessibility and compatibility. Kubernetes' greater flexibility and adaptability make it a suitable choice for organizations with extensive, intricate requirements. OpenShift's streamlined, robust user experience makes it a preferred choice for teams that prioritize simplicity and reliability. Your specific requirements and operational demands will largely determine which of the two you choose.

Industrial Application: Use Cases of Kubernetes and OpenShift

Container orchestration platforms such as Kubernetes and OpenShift have moved to center stage in how applications are built and deployed. Let's examine their significant impact on various industries.

Kubernetes: Infusing Progress into Tech Space

Kubernetes has changed how applications are managed, optimized, and launched. It grew out of Google's experience with Borg, the internal system that runs services such as Search and Gmail. By Google's own account, Borg launches billions of containers every week while scaling reliably.

IBM uses Kubernetes to run Watson, its advanced question-answering system. Watson understands and answers questions posed in natural language, so it depends on a complex distributed infrastructure, which Kubernetes manages effectively.

OpenShift: Prompting a Banking Revolution

In banking, OpenShift has proven effective thanks to its strong security features and enterprise-grade functionality. Barclays, a globally recognized bank, uses OpenShift to build and run applications. OpenShift speeds up Barclays' application development and operations while strengthening security.

Similarly, OpenShift helps KeyBank, a major US bank, modernize its operations. It enables KeyBank to create distinctive digital experiences for its customers while reducing operational complexity.

Kubernetes and OpenShift: Trailblazing Health Sector Advancements

Kubernetes and OpenShift are also accelerating application management and deployment in healthcare. Philips, an international leader in health technology, uses Kubernetes to manage its HealthSuite digital platform. Kubernetes enables the platform to handle the massive flow of data from Philips' devices.

Meanwhile, Boston Children's Hospital runs its compute-intensive health informatics system with the help of OpenShift. The platform helps the hospital move applications from concept to production faster, improving patient services and care.

Kubernetes: Supercharging E-Commerce

E-commerce giants such as Shopify and eBay use Kubernetes to scale their infrastructure. Kubernetes helps keep their operations stable even during sudden traffic spikes around major sale events such as Black Friday and Cyber Monday.

OpenShift: Enhancing Telecommunication Channels

Telecommunications leaders such as Verizon and AT&T use OpenShift for application development and deployment. OpenShift streamlines how these companies build and release applications, helping them expand their services and improve customer engagement.

Kubernetes & OpenShift: A Brief Overview

In short, Kubernetes and OpenShift are widespread and flexible, and they appear across a wide range of sectors. From the large-scale container experience Google built into Kubernetes to fast-tracking digitization at Barclays, these platforms have left a significant mark on the tech industry. Their adoption across industries testifies to their value and influence.

Future Trends: Kubernetes and OpenShift in the Next Decade

Looking ahead, Kubernetes and OpenShift seem certain to remain central to organizing and managing containers. Their evolution will reflect the changing requirements of different sectors, the rapid advance of technology, and the growing importance of robust security and flexible scalability in an increasingly digital society.

Kubernetes: The Nerve Centre of Hybrid Cloud

Current trends suggest that Kubernetes will consolidate its role as the leading tool for container orchestration. A major shift shaping its path is the growing adoption of hybrid cloud models.

Organizations that want to combine on-premises and cloud infrastructure will rely more heavily on Kubernetes to integrate and govern these different environments consistently. Its inherent flexibility and scalability make it well suited to simplifying the complex deployment of hybrid cloud applications.

Deeper integration with service meshes, serverless frameworks, and AI workloads is also likely to further increase its flexibility and range of applications.

OpenShift: Business-centric Kubernetes Approach

Meanwhile, OpenShift will continue to shape how companies adopt and operate Kubernetes. It aims to increase the value businesses get from Kubernetes by adding extra features and tools.

A notable trend shaping OpenShift's path is the rising demand for simpler Kubernetes management. Its accessible interface and powerful automation make it a favourite choice for companies that want to use Kubernetes' full capabilities without its complexity.

Projected improvements include broader multi-cluster management, support for edge computing, and bolstered security features for OpenShift. These will fortify its status as a leading business-focused Kubernetes platform.

Forecasts for Kubernetes and OpenShift

Infusing AI and Machine Learning

The integration of Artificial Intelligence (AI) and Machine Learning (ML) is likely to be a major influence on the future of Kubernetes and OpenShift. These technologies could significantly improve automation and optimize many aspects of container orchestration and management, from task scheduling to resource allocation.

Kubernetes is expected to use AI and ML to fine-tune its autoscaling, leading to better resource usage and performance. Similarly, OpenShift could use these technologies to extend its automation capabilities, reduce reliance on manual intervention, and optimize operations.
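For context, the autoscaling that such techniques would build on today is the Kubernetes HorizontalPodAutoscaler (HPA), whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python function below is a minimal sketch of that rule, assuming a single metric, the default tolerance of 0.1, and clamping to min/max replica bounds. The function name `desired_replicas` and its parameters are hypothetical, and the sketch deliberately ignores details the real controller handles, such as stabilization windows, pod readiness, and missing metrics.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Hypothetical helper: a simplified model of the HPA scaling rule,
    not the actual controller code."""
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")

    # Ratio of observed load to the target load per replica.
    ratio = current_metric / target_metric

    # Inside the tolerance band the replica count is left unchanged,
    # which avoids scaling on small fluctuations.
    if abs(ratio - 1.0) <= tolerance:
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)

    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target -> scale to 6.
    print(desired_replicas(4, 90.0, 60.0))
```

For example, 4 replicas averaging 90% CPU against a 60% target gives ceil(4 × 1.5) = 6 replicas. This rule is purely reactive: it responds to load that has already arrived, which is exactly the gap predictive, ML-driven autoscaling aims to close.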

Final Reflections

In summary, the coming years promise exciting progress for Kubernetes and OpenShift. As both adapt to changing business requirements, they are likely to remain highly influential in how containers are organized and managed. Whether you are a developer, a system administrator, or a business leader, keeping up with these trends can help you make the most of these transformative tools.

Conclusion: Making the Informed Choice Between Kubernetes and OpenShift

Among container management platforms, Kubernetes and OpenShift stand out as two of the leading options. Choosing between them is not straightforward and requires a careful study of your business's specific needs and development goals. Let's examine both in detail.

Kubernetes: The Open Source Leader

Kubernetes has established itself as the leader in container orchestration through its extensive functionality and the freedom it gives developers. It is highly adaptable and can be shaped to fit almost any organization's requirements. And because it is open source, it receives regular upgrades and improvements from a worldwide developer community.

Nonetheless, Kubernetes has its challenges. The flexibility that makes it appealing also adds complexity, creating a steep learning curve for teams less experienced with advanced DevOps practices. Kubernetes also offers limited built-in support for areas such as multi-tenancy and continuous integration and deployment (CI/CD), so these typically require additional tools and configuration.

OpenShift: The Comprehensive, Enterprise-Grade Solution

OpenShift, built on top of Kubernetes, positions itself as a solution that addresses many of Kubernetes' drawbacks. It offers a more structured and intuitive user interface and ships with strong support for multi-tenancy, CI/CD, and other essential features, making it ready to use out of the box.

OpenShift integrates closely with other Red Hat products and comes with professional support, which makes it appealing to businesses. That convenience has a cost: potentially high licensing fees and less flexibility than upstream Kubernetes. Because OpenShift follows Red Hat's own tested release cycle, the newest features from the wider Kubernetes community may also arrive later.

Arriving At Your Decision

Choosing between Kubernetes and OpenShift requires a clear understanding of your organization's needs, existing tooling, and future plans. Key considerations include:

- Skills and Tooling: If your team has mature DevOps skills and can handle the intricacies of Kubernetes, it can build an exceptionally flexible and responsive platform. However, OpenShift may be a better fit if your team prefers a simpler workflow or lacks broad DevOps expertise.

- Cost: Although Kubernetes is open source and free to use, hidden costs can emerge from additional tools, configuration work, and higher operational overhead. OpenShift, by contrast, carries licensing fees, but its ready-made platform may help reduce operational expenses.

- Business Goals: If your company needs enterprise features such as multi-tenancy, built-in CI/CD, and professional support, OpenShift can provide them.

- Innovation vs. Stability: If staying on the cutting edge and having the freedom to customize and experiment are vital, Kubernetes may be the better platform. If you prefer a stable, consistently maintained platform with regular, tested updates, OpenShift may be more attractive.

Ultimately, Kubernetes and OpenShift both offer powerful capabilities for container management, and each has distinct advantages worth weighing. The choice between them should be a deliberate decision based on your organization's particular constraints and future goals. Understanding each platform's strengths and matching them to your requirements lays the groundwork for effective container orchestration.
