Introduction to Cloud Native CI/CD Pipelines

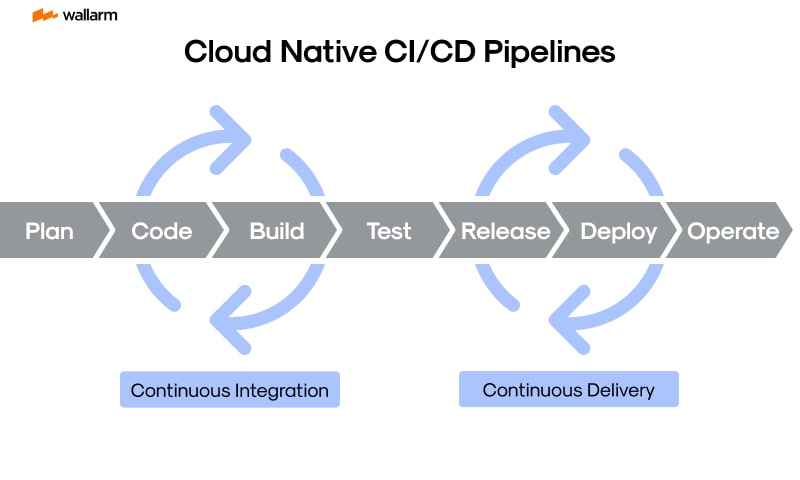

In today's fast-moving software landscape, the importance of robust, scalable, and dependable Continuous Integration/Continuous Deployment (CI/CD) pipelines cannot be overstated. These pipelines form the backbone of modern DevOps practice, letting developers automate the integration of code changes and the rollout of applications to production environments.

These pipelines matter even more for cloud-based applications. Built for cloud computing, such applications need a CI/CD system that can build, test, and deploy them in a cloud environment. This is where Cloud Native CI/CD pipelines come in.

Grasping the Concept of Cloud Native CI/CD Frameworks

Cloud Native CI/CD tools are designed around the specific requirements of building and deploying cloud-based applications. Drawing on cloud infrastructure, they provide pipelines that are scalable, dependable, and efficient to operate.

These pipelines are typically divided into a few key stages:

- Source Code Management (SCM): This is where developers store and manage their code. The SCM system triggers the pipeline whenever new code changes are pushed.

- Build: Here, the application is turned into a runnable artifact. This could mean compiling the source code, packaging the application into a container image, or building serverless functions.

- Test: At this stage, a variety of tests are run to verify that the application behaves as intended. These can include unit tests, integration tests, and end-to-end tests.

- Deploy: In this final stage, the application is rolled out to a live environment, which could be a public cloud, a private cloud, or a hybrid of the two.

Why Cloud Native CI/CD Frameworks Are Crucial

Cloud Native CI/CD platforms bring a host of advantages. For starters, they let developers integrate and deploy code changes quickly, which means new features, bug fixes, and improvements reach end users faster.

Secondly, these systems play a crucial role in assuring software quality. By automating testing, developers can identify and correct flaws earlier in the development process, reducing the chance of defects slipping into production.

Third, Cloud Native CI/CD systems contribute to scalability. Because they run on cloud infrastructure, these pipelines can expand to handle larger workloads and contract when demand recedes.

Lastly, these pipelines foster a collaborative and transparent atmosphere. Automating CI/CD lets all team members track code changes, tests, and rollouts, which improves communication and cooperation.

In the following sections, we look more closely at two well-known Cloud Native CI/CD tools, Tekton and Argo. We explore their architecture, how to set up pipelines with each tool, their performance, their scalability, and their security, along with real-life applications.

Understanding Tekton in the Landscape of CI/CD Pipelines

Tekton is an open-source framework built specifically for creating flexible, cloud-native CI/CD systems. As a project of the Continuous Delivery Foundation, Tekton emphasizes integration and interoperability among CI/CD tools. It is built on Kubernetes primitives, which makes it a genuinely Kubernetes-native platform that benefits from Kubernetes' resilience and flexibility.

Integral Elements of Tekton

At the heart of Tekton are several primary building blocks that together make up a reliable, scalable CI/CD pipeline:

- Pipelines: A pipeline arranges tasks in a defined order to achieve a specific objective, such as building software or deploying a web service.

- Tasks: These are the basic units of work within a pipeline, each made up of steps that run in sequence. Tasks are reusable across pipelines, which encourages reuse and reduces complexity.

- PipelineResources: These serve as inputs or outputs for tasks, such as Git repositories, Docker images, or cloud storage buckets. Note that newer Tekton releases have deprecated PipelineResources in favor of workspaces, parameters, and results.

- Triggers: These start a pipeline's execution in response to events such as a Git push or a pull request, or on a schedule.

Why Tekton's Kubernetes-Native Methodology Stands Out

Tekton's Kubernetes-native approach offers several benefits. First, it takes full advantage of Kubernetes' security, flexibility, and resilience. Second, it integrates cleanly with other Kubernetes-native applications and services. Third, it makes Tekton pipelines portable across Kubernetes environments, which keeps behavior consistent and simplifies operations.

Tekton expresses this approach by using Kubernetes Custom Resource Definitions (CRDs) to represent pipelines, tasks, and other CI/CD concepts. As a result, you can manage Tekton pipelines with the same Kubernetes command-line interface (kubectl) and API that you use for other Kubernetes resources.

Unleashing Tekton's Flexibility

Adaptability is one of Tekton's most valuable traits. It offers numerous extension points that let you tailor and extend its features to your requirements: you can define custom task kinds, introduce new types of PipelineResources, or integrate Tekton with other tools and services.

Tekton’s Distinct Presence in the CI/CD Landscape

Among CI/CD tools, Tekton stands apart through its Kubernetes-native architecture, its adaptability, and its emphasis on interoperability. Tekton is designed to work alongside a wider ecosystem of CI/CD tools rather than taking a cumbersome all-in-one approach. This makes it a practical, customizable foundation for building cloud-native CI/CD pipelines.

Tekton's granular approach to pipeline configuration and control also sets it apart from CI/CD solutions that supply rigid, preset pipeline models. With Tekton, you model your pipeline around your own needs and operations.

To sum up, Tekton is a capable and adaptable framework for building cloud-native CI/CD pipelines, distinguished by its Kubernetes-native design, its flexibility, and its focus on interoperability.

Navigating Argo: The New Player in CI/CD Pipelines

Argo has carved a notable place for itself in the realm of Continuous Integration/Continuous Deployment (CI/CD) tools, despite its relative novelty. Its rapidly growing user base owes much to its comprehensive feature set and effective exploitation of Kubernetes' capabilities. Argo excels particularly in devising complex workflows and pipelines.

Argo's Noteworthy Features

Argo is engineered to cater to the sophisticated requirements of adept DevOps teams. Here are some of its pivotal attributes:

- Container-Native Workflows: Each step of an Argo workflow runs in its own container. This supports scalability and flexibility, because each step's resource allocation can be tailored to its specific requirements.

- Support for DAGs and Step Sequences: Argo supports Directed Acyclic Graphs (DAGs) as well as step-based sequences. This lets it express complex workflows that combine parallel and serial execution, loops, conditionals, and more.

- Event-Triggered Workflows: Argo fits into existing development practices by starting workflows in response to events, such as a change pushed to a Git repository.

- Web-Based User Interface: Argo provides a web UI for visually inspecting workflows, which makes monitoring and managing them more intuitive.

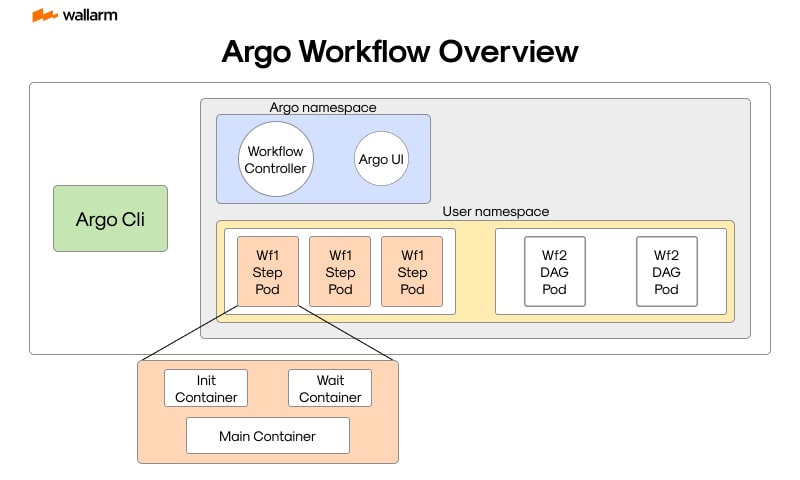

Diving Deep into Argo's Architecture

Argo's setup comprises three integral components:

- Argo Workflows: The core of Argo, it provides the tools for defining and running workflows.

- Argo Events: This component provides the mechanisms to trigger workflows in response to specified events.

- Argo CD: This component handles continuous delivery. It lets you declare and monitor applications, making sure your environment stays in line with the desired state.

These components, when combined, provide a comprehensive solution for managing CI/CD activities. Argo's architecture is designed to amplify stability and expandability by capitalizing on Kubernetes' features, thereby strengthening your pipelines to efficiently meet your software's demands.

Crafting an Argo Pipeline

To build an Argo pipeline, you describe a workflow in a YAML file. This file lists the steps in the pipeline, the container used for each step, and the resources allocated to them. Here's a simple example of an Argo workflow file:
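A minimal sketch of such a file, modeled on Argo's widely used hello-world example, might look like the following. The docker/whalesay image tag, the argument text, and the resource limits are assumptions chosen for illustration.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  # generateName makes Argo append a random suffix, so every run gets a unique name
  generateName: hello-world-
spec:
  # entrypoint names the template that runs first
  entrypoint: whalesay
  templates:
    - name: whalesay
      container:
        image: docker/whalesay:latest   # assumed image tag
        command: [cowsay]
        args: ["hello world"]
        # per-step resource limits (illustrative values)
        resources:
          limits:
            memory: 32Mi
            cpu: 100m
```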

In this case, the workflow consists of a single step that runs the cowsay command in the whalesay container. The generateName attribute ensures a unique name for every workflow execution.

Argo's approach to workflow definition proves highly flexible. It allows you to adjust resources, append or discard steps, and devise intricate workflows with sequential and concurrent executions.

Argo distinguishes itself as a powerful apparatus for mastering CI/CD pipelines. Its container-centric model, coupled with powerful abilities and a malleable architecture, makes Argo a top contender for teams looking to harness the power of Kubernetes effectively.

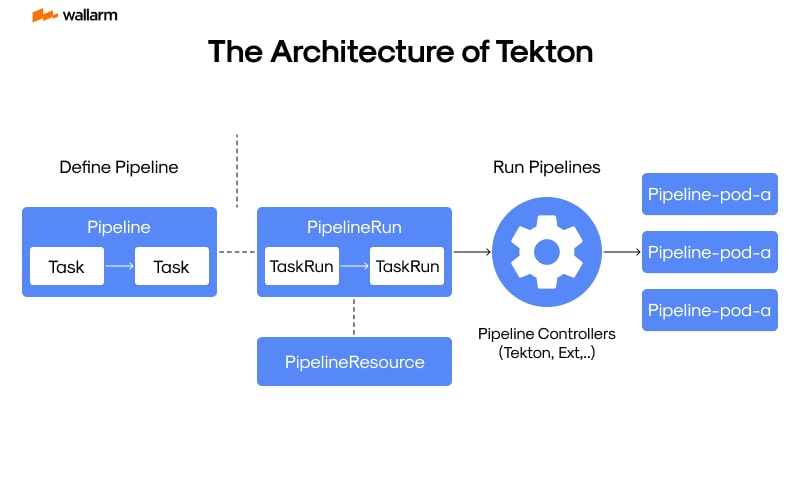

Technical Deep Dive: The Architecture of Tekton

Tekton is engineered to fit Kubernetes' architecture. It is a specialized tool for building and running robust Continuous Integration (CI) and Continuous Delivery (CD) systems for cloud-native applications. This section explores Tekton's operating model and its characteristic structure.

Principal Constituents of Tekton

Tekton is built on three core elements: Tasks, Pipelines, and PipelineResources:

- Tasks: The fundamental building blocks of Tekton, Tasks consist of a series of ordered steps. Each step performs a specific function when executed.

- Pipelines: Pipelines define which Tasks are grouped together and the order in which they run. They act as the master plan for a workflow, combining multiple Tasks.

- PipelineResources: These provide the inputs that Tasks consume and the outputs they produce, such as Git repositories, Docker image references, and cloud storage buckets.

Tekton: Operational Methodology

Tekton's working model revolves around Pipelines. A Pipeline is a collection of Tasks executed in a defined order to reach a desired goal. Tekton runs each Task in its own pod, which keeps Tasks isolated from one another.

The process starts with defining Tasks, which are then assembled into a Pipeline. A Pipeline can be started manually or by a trigger. Running a Pipeline creates a PipelineRun, a concrete execution of that Pipeline. The Tasks within the PipelineRun execute in the defined order, with the completion of one Task starting the next.

Tekton’s Partnership with Kubernetes

Tekton's tight integration with Kubernetes lets it draw on several Kubernetes features:

- Pods: Tekton runs each Task in a separate Kubernetes pod, which provides a secure and isolated execution environment.

- Custom Resource Definitions (CRDs): Tekton uses Kubernetes CRDs to define its own resource types, such as Tasks, Pipelines, and PipelineResources.

- Events: Event-based triggers can start Tekton pipeline runs, tying the CI/CD workflow closely to the Kubernetes environment.

Advantages of Tekton

Tekton's architecture is marked by versatility. Because it is built on CRDs, developers can define and shape their own resources, which greatly extends what Tekton can do.

To sum up, Tekton is built to offer a thorough, flexible, and scalable solution for CI/CD. Its close integration with Kubernetes and its room for extension make it an effective automation tool for any cloud-native environment.

Exploring Argo's Infrastructure: Behind the Scenes

Argo is a strong option among cloud-native CI/CD tools, offering a solid and adaptable foundation for a variety of applications. In this section, we examine how Argo works: its structure, its components, and how they interact to produce smooth and efficient CI/CD pipelines.

Essential Structure of Argo

Argo is built on the Kubernetes platform and uses its capabilities to form a scalable and resilient CI/CD solution. Its key components include:

- Argo Workflows: This is the core of Argo, responsible for defining and executing workflows. It supports complex pipelines with many stages, dependencies, and conditions.

- Argo Events: This component handles event-driven workflow triggering. It listens for specific events and starts workflows in response, enabling automated pipeline execution.

- Argo CD: This is Argo's continuous delivery tool, designed to deploy applications to Kubernetes clusters. It ensures that the live application state matches the desired state described in Git repositories.

- Argo Rollouts: This component provides advanced deployment strategies such as canary and blue-green deployments. It helps manage the rollout of new application versions while keeping downtime and risk to a minimum.

Component Interconnections

Argo's components work together to provide a complete CI/CD solution. Argo Workflows defines the pipeline's progression, from building code to testing and deployment. Argo Events watches for events such as code commits or pull requests and triggers the appropriate workflows. Argo CD then deploys the application to Kubernetes clusters, making sure that the live state matches the desired state. Lastly, Argo Rollouts controls the release of new versions, reducing risk and downtime.

The Workflow Engine of Argo

Argo's workflow engine enables complex pipeline structures. Workflow definitions are written in YAML and can include many stages, dependencies, and conditions. Every stage in a workflow is a container that performs a specific task, such as building code or running tests. Stages can run in sequence or in parallel, and conditions can determine the path a pipeline takes.

Event-Driven Approach of Argo

Argo's event-driven approach allows pipelines to run automatically. Argo Events watches for specific events, such as code commits or pull requests, and triggers the appropriate workflows. Pipelines therefore start as soon as a change arrives, which supports rapid integration and deployment of code changes.

Argo CD: Simplifying Continuous Delivery

Argo CD, Argo's continuous delivery tool, is designed to deploy applications to Kubernetes clusters. It follows a GitOps approach, in which the desired state of the application is described in a Git repository. Argo CD continuously monitors the repository and checks that the live application state matches the desired state. This enables automated deployments and easy rollback if a problem occurs.
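As a rough illustration of the GitOps model described above, an Argo CD Application manifest points at a Git repository and a target cluster. The sketch below assumes Argo CD is installed in the argocd namespace and uses the public argocd-example-apps repository; the application name and destination namespace are placeholders.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: guestbook          # placeholder application name
  namespace: argocd        # assumes Argo CD runs in this namespace
spec:
  project: default
  source:
    # Git repository that holds the desired state
    repoURL: https://github.com/argoproj/argocd-example-apps.git
    targetRevision: HEAD
    path: guestbook
  destination:
    # the cluster Argo CD itself runs in
    server: https://kubernetes.default.svc
    namespace: guestbook   # placeholder target namespace
  syncPolicy:
    automated:
      prune: true      # delete resources that were removed from Git
      selfHeal: true   # revert manual drift back to the Git state
```

With an automated sync policy like this, a revert of the Git commit acts as the rollback mechanism.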

Argo Rollouts: Advanced Deployment Strategies

Argo Rollouts enables advanced deployment strategies such as canary and blue-green deployments. Canary deployments gradually release a new version to a small fraction of users, leaving room for monitoring and testing before the full rollout. Blue-green deployments maintain two versions of the application and switch traffic between them to minimize downtime during deployments.
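A canary strategy of this kind can be sketched as an Argo Rollouts resource. The manifest below is a minimal, assumption-laden example: the demo-app name, the nginx image, the replica count, and the traffic weights are all illustrative, and it presumes the Argo Rollouts controller is installed in the cluster.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Rollout
metadata:
  name: demo-app             # hypothetical application name
spec:
  replicas: 5
  selector:
    matchLabels:
      app: demo-app
  template:
    metadata:
      labels:
        app: demo-app
    spec:
      containers:
        - name: demo-app
          image: nginx:1.25  # illustrative image
          ports:
            - containerPort: 80
  strategy:
    canary:
      steps:
        - setWeight: 20            # shift roughly 20% of the rollout to the new version
        - pause: {duration: 10m}   # observe for ten minutes
        - setWeight: 50
        - pause: {}                # wait for manual promotion before completing
```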

In summary, Argo's architecture offers a sturdy and adaptable solution for cloud-native CI/CD pipelines. Its components combine to deliver automated pipeline execution, continuous delivery, and advanced deployment strategies, all built on the Kubernetes platform, which is known for its scalability and resilience.

Setting up a Tekton Pipeline: A Step-By-Step Guide

Setting up a Tekton pipeline involves a sequence of steps that need to be carried out carefully. This guide walks through those steps and explains the purpose of each one.

Phase 1: Install Tekton Pipelines

The process begins with installing Tekton Pipelines on your Kubernetes cluster using the kubectl command-line utility. The following command applies the project's published manifest for the latest Tekton Pipelines release:

kubectl apply --filename https://storage.googleapis.com/tekton-releases/pipeline/latest/release.yaml

Phase 2: Verify the Installation

Next, confirm that Tekton Pipelines was installed successfully by listing the pods in its namespace:

kubectl get pods --namespace tekton-pipelines

After a successful installation, you should see running pods listed in the tekton-pipelines namespace.

Phase 3: Define Tasks

Next, define the tasks that make up your pipeline's work. A Tekton task is a set of steps executed in a defined order, described in a YAML file. An example of a basic task definition is shown below:
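A minimal sketch of a hello-world task follows. It assumes a Tekton release that serves the tekton.dev/v1 API (older installations use tekton.dev/v1beta1 instead); the step name and the alpine image are illustrative choices.

```yaml
apiVersion: tekton.dev/v1   # assumption: use tekton.dev/v1beta1 on older releases
kind: Task
metadata:
  name: hello-world
spec:
  steps:
    # each step runs in its own container, in the order listed
    - name: echo            # illustrative step name
      image: alpine         # illustrative image
      script: |
        #!/bin/sh
        echo "Hello World"
```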

Phase 4: Structure a Pipeline

Once the tasks are defined, the next step is to define the pipeline. A Tekton pipeline combines tasks so that they run in a specific order. Here's an example of a basic pipeline that includes the hello-world task:
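One way such a pipeline might be written is sketched below, again assuming the tekton.dev/v1 API. The pipeline-level task name say-hello is a hypothetical label; taskRef points at the hello-world task by name.

```yaml
apiVersion: tekton.dev/v1   # assumption: use tekton.dev/v1beta1 on older releases
kind: Pipeline
metadata:
  name: hello-world-pipeline
spec:
  tasks:
    - name: say-hello       # hypothetical name for this pipeline task
      taskRef:
        name: hello-world   # refers to the Task defined in the same namespace
```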

Phase 5: Run the Pipeline

The final stage is to run your pipeline by creating a PipelineRun object. Below is an example of a PipelineRun for hello-world-pipeline:
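A minimal PipelineRun could look like the sketch below. The run name and the file name in the comments are hypothetical, and the tekton.dev/v1 API version is an assumption about the installed release.

```yaml
# Illustrative usage, assuming this file is saved as hello-world-pipeline-run.yaml:
#   kubectl apply --filename hello-world-pipeline-run.yaml
#   kubectl get pipelineruns
apiVersion: tekton.dev/v1   # assumption: use tekton.dev/v1beta1 on older releases
kind: PipelineRun
metadata:
  name: hello-world-pipeline-run   # hypothetical run name
spec:
  pipelineRef:
    name: hello-world-pipeline     # the Pipeline to execute
```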

To create the PipelineRun, save its YAML to a file and apply it with kubectl, using kubectl apply --filename followed by the file name.

To monitor the PipelineRun's progress, list the runs and their status with kubectl get pipelineruns.

Setting up a Tekton pipeline may seem intricate at first, but it becomes straightforward once you understand the core concepts and the order of the steps. The key is to define your tasks and pipelines precisely, keeping every step clear and well ordered. With this guide, your first Tekton pipeline is well within reach.

Creating a Pipeline with Argo: Quick and Easy Ways

Introduction to Argo Pipelines

Argo is a container-native workflow engine for orchestrating parallel tasks on Kubernetes. It has become an important tool for building complex CI/CD pipelines, thanks to its ability to handle tasks at large scale. An Argo pipeline is defined in a YAML file, which keeps the interface simple and clean.

Requirements for Argo Pipeline Creation

To set up an Argo Pipeline, you need the following:

- A running Kubernetes cluster, which is the foundation for everything Argo does.

- Access to the Argo CLI (Command Line Interface). This tool, which can be downloaded from Argo's GitHub page, is used to submit and manage workflows.

- Argo installed on your cluster, for example with the Helm package manager.

Procedure to Establish an Argo Pipeline

With the prerequisites in place, creating an Argo Pipeline involves three steps:

1. Pipeline Definition: Define your pipeline in a YAML file that sets out the steps it runs and their order.

2. Pipeline Activation: Once the definition is ready, submit the pipeline to Argo with the argo submit command. This starts a new workflow in Kubernetes.

3. Pipeline Monitoring: After launch, track your pipeline's progress with the argo list and argo get commands. They show the current status of your workflows and a detailed report on each step.
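The three steps above can be sketched with a small two-stage workflow. Everything named here (the build-and-test prefix, the run-step template, the alpine image, and the file name in the comments) is a hypothetical choice for illustration.

```yaml
# Illustrative usage, assuming this file is saved as build-and-test.yaml:
#   argo submit build-and-test.yaml    # step 2: start the workflow
#   argo list                          # step 3: see the status of workflows
#   argo get <workflow-name>           # step 3: detailed view of one workflow
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: build-and-test-   # hypothetical name prefix
spec:
  entrypoint: main
  templates:
    - name: main
      steps:
        # each outer list item is a stage; stages run one after another
        - - name: build
            template: run-step
            arguments:
              parameters:
                - name: message
                  value: "building"
        - - name: test
            template: run-step
            arguments:
              parameters:
                - name: message
                  value: "testing"
    # reusable template invoked by both stages
    - name: run-step
      inputs:
        parameters:
          - name: message
      container:
        image: alpine:3.19   # illustrative image
        command: [echo]
        args: ["{{inputs.parameters.message}}"]
```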

Ways to Optimize Argo Pipelines

While setting up pipelines in Argo is straightforward, a few strategies can make them more efficient:

- Template Usage: Argo lets you use templates to reduce repetition. You can define standalone templates or embed them in your pipeline's YAML file.

- Incorporate Error Handling: Argo offers several mechanisms for error handling. Adding retries, error recovery, and fallback steps makes your pipelines more robust.

- Explore Argo Features: Familiarize yourself with the features Argo provides, such as passing parameters between steps, storing and retrieving artifacts, and conditional control of pipeline flow.
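To make the error-handling point concrete, the sketch below combines a retry strategy with an exit handler. The template names, the deliberately failing command, and the backoff values are assumptions chosen only to show the shape of the configuration.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: retry-example-   # hypothetical name prefix
spec:
  entrypoint: flaky-step
  onExit: notify                 # exit handler: runs whether the workflow succeeds or fails
  templates:
    - name: flaky-step
      retryStrategy:
        limit: "3"               # retry up to three times
        retryPolicy: OnFailure   # retry only when the container exits non-zero
        backoff:
          duration: "10s"        # wait before the first retry
          factor: "2"            # double the wait on each further retry
      container:
        image: alpine:3.19       # illustrative image
        command: [sh, -c]
        args: ["exit 1"]         # always fails, to demonstrate retries
    - name: notify
      container:
        image: alpine:3.19
        command: [sh, -c]
        args: ["echo workflow finished with status {{workflow.status}}"]
```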

To summarize, Argo provides strong support for building CI/CD pipelines. Its parallel execution, fault-tolerance mechanisms, and broad feature set make it a valuable tool for teams looking to refine their deployment practices.

Tekton vs Argo: Performance Analysis

For Cloud Native Continuous Integration/Continuous Deployment (CI/CD) pipelines, performance matters because it directly affects how quickly your code moves from development to production. This analysis examines the performance characteristics of two frontrunners in this domain: Tekton and Argo.

Probing Tekton's Performance

Tekton, an open-source framework built to work with Kubernetes for creating CI/CD systems, runs every pipeline step in a separate container, which isolates steps from one another and makes efficient use of resources.

Several aspects contribute to Tekton's performance:

- Scalability: Tekton's architecture supports horizontal scaling, so it can take on additional pipelines as workloads grow, which makes it suitable for large projects.

- Concurrency: Tekton can execute tasks concurrently, which can substantially reduce pipeline execution time.

- Effective Use of Resources: Because Tekton isolates each pipeline step in its own container, resources are consumed only when needed. This reduces waste and improves overall performance.

Here's a typical Tekton task definition:
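As a sketch of what such a task might look like, the example below defines two steps, each running in its own container. The task name, step names, images, and scripts are all hypothetical, and the tekton.dev/v1 API version is an assumption about the installed release.

```yaml
apiVersion: tekton.dev/v1   # assumption: use tekton.dev/v1beta1 on older releases
kind: Task
metadata:
  name: build-and-test      # hypothetical task name
spec:
  steps:
    # steps run in order, each in a separate container
    - name: build
      image: golang:1.22    # illustrative image
      script: |
        #!/bin/sh
        echo "compiling the application"
    - name: test
      image: golang:1.22
      script: |
        #!/bin/sh
        echo "running the test suite"
```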

Evaluating Argo's Performance

Argo, by contrast, is a Kubernetes-native workflow engine built to handle large and complex workflows, and it performs particularly well when managing sophisticated workflows.

Noteworthy performance elements of Argo include:

- Parallel Execution: Argo can run tasks in parallel, substantially reducing the time required to finish a workflow.

- Execution of Directed Acyclic Graphs (DAGs): Argo's ability to execute DAGs lets each task start as soon as its dependencies complete, which avoids unnecessary waiting and improves overall performance.

- Garbage Collection: Argo's built-in garbage collection cleans up completed resources, which helps sustain performance.

Here's a basic Argo workflow:
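The sketch below shows a diamond-shaped DAG in which tasks B and C run in parallel once A completes, together with pod garbage collection settings. The names, the alpine image, and the cleanup values are illustrative assumptions.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: dag-diamond-   # hypothetical name prefix
spec:
  entrypoint: diamond
  podGC:
    strategy: OnPodSuccess     # delete each pod as soon as it succeeds
  ttlStrategy:
    secondsAfterCompletion: 3600   # remove the finished workflow after an hour
  templates:
    - name: diamond
      dag:
        tasks:
          - name: A
            template: echo
            arguments:
              parameters: [{name: message, value: A}]
          - name: B
            dependencies: [A]      # B and C both wait only for A
            template: echo
            arguments:
              parameters: [{name: message, value: B}]
          - name: C
            dependencies: [A]
            template: echo
            arguments:
              parameters: [{name: message, value: C}]
          - name: D
            dependencies: [B, C]   # D starts once both B and C finish
            template: echo
            arguments:
              parameters: [{name: message, value: D}]
    - name: echo
      inputs:
        parameters:
          - name: message
      container:
        image: alpine:3.19         # illustrative image
        command: [echo, "{{inputs.parameters.message}}"]
```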

Tekton vs Argo: A Comparative Examination

When comparing Tekton and Argo, the needs of your project should drive the decision. Both tools perform well, but each excels in different areas.

- Capability to Scale: Both Tekton and Argo scale well. Still, Tekton's structure might be more favorable in situations where the number of pipelines is expected to grow substantially.

- Concurrent and Parallel Task Execution: Both tools support concurrent and parallel execution of tasks. Argo's ability to execute DAGs, however, makes managing task dependencies more efficient.

- Optimal Resource Management: Tekton's approach of running each step in a separate container can result in better resource allocation. At the same time, Argo's built-in garbage collection contributes significantly to efficient resource control.

In short, both Tekton and Argo offer solid performance. Choosing between the two depends on the specific needs of your project, the complexity of your workflows, the expected load on your pipelines, and your resource management requirements.

Scalability Showdown: Tekton and Argo in Comparison

In the realm of Cloud Native CI/CD Pipelines, scalability is a critical factor to consider. It determines how well a system can handle increased workloads and adapt to growing demands. In this chapter, we will delve into a detailed comparison of the scalability of Tekton and Argo, two leading players in the CI/CD landscape.

Tekton: Scalability Features

Tekton, a Kubernetes-native open-source framework for creating CI/CD systems, is designed with scalability in mind. It leverages the inherent scalability of Kubernetes to handle increased workloads.

Tekton Pipelines are defined through Kubernetes Custom Resource Definitions (CRDs), so they are created, scheduled, and managed like any other Kubernetes resource. This allows multiple pipeline instances to run concurrently, so increased workloads can be handled efficiently.

Moreover, Tekton's architecture is designed to be decoupled and composable. This means that each component of a Tekton pipeline can be scaled independently, providing granular control over resource allocation and usage.

Argo: Scalability Features

Argo, on the other hand, is a container-native workflow engine for orchestrating parallel jobs on Kubernetes. It is also designed with scalability as a core feature.

Argo Workflows can scale horizontally, meaning they can handle an increased number of tasks by distributing them across multiple nodes. Because each step runs as its own Pod, the Kubernetes scheduler spreads the work across the cluster, and the number of Pods grows and shrinks with the current workload.

Argo also supports dynamic workflow parallelism, which allows for the execution of a variable number of parallel steps depending on the runtime data. This feature further enhances Argo's scalability by allowing it to adapt to changing workloads dynamically.
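Dynamic parallelism of this kind can be sketched with a withParam loop, where one step emits a JSON list at runtime and the next step fans out over it. The template names, images, and list contents below are assumptions for illustration.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: fan-out-   # hypothetical name prefix
spec:
  entrypoint: fan-out
  templates:
    - name: fan-out
      steps:
        - - name: generate
            template: gen-list
        # one parallel "process" step is created per element of the generated list
        - - name: process
            template: process-item
            arguments:
              parameters:
                - name: item
                  value: "{{item}}"
            withParam: "{{steps.generate.outputs.result}}"
    - name: gen-list
      script:
        image: python:3.12-alpine   # illustrative image
        command: [python]
        # stdout of the script becomes outputs.result
        source: |
          import json
          print(json.dumps(["a", "b", "c"]))
    - name: process-item
      inputs:
        parameters:
          - name: item
      container:
        image: alpine:3.19
        command: [echo, "processing {{inputs.parameters.item}}"]
```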

Tekton vs Argo: Scalability Comparison

When comparing the scalability of Tekton and Argo, it's important to consider the specific requirements of your CI/CD workflows. Both Tekton and Argo offer robust scalability features, but they approach scalability in slightly different ways.

Tekton's strength lies in its decoupled design, which lets you size and scale each component of a pipeline independently. This provides a high degree of flexibility and control, making it well-suited for complex CI/CD workflows that require granular resource management.

Argo, on the other hand, excels in horizontal scaling and dynamic parallelism. This makes it particularly effective for workflows that involve a large number of parallel tasks and require the ability to adapt to changing workloads on the fly.

In conclusion, both Tekton and Argo offer robust scalability features that can handle increased workloads efficiently. The choice between the two will depend on the specific scalability requirements of your CI/CD workflows.

Security Aspects of Tekton and Argo Pipelines

In our exploration of secure practices involving Cloud Native CI/CD Pipelines, we are zooming into two key tools: Tekton and Argo. With security being non-negotiable in this field, both tools have implemented measures to ensure this. This section aims to dissect the security practices employed by both Tekton and Argo, laying out the advantages and drawbacks.

The Blueprint of Tekton's Security

Tekton has constructed its security mechanism around Kubernetes capabilities, tapping into its solid security measures. Utilizing Kubernetes' RBAC (Role-Based Access Control), Tekton furnishes administrators with the power to specify user permissions on distinct resources, thus establishing a shield against unauthorized usage or changes.

Furthermore, Tekton uses Kubernetes namespaces so that pipelines can run in separate, isolated spaces, which limits the damage a compromised pipeline can do to others. In addition, Tekton executes pipelines in isolated containers, reducing the potential impact of a security incident.

An outline of Tekton's security measures includes:

- Implementation of RBAC

- Isolation through namespaces

- Protection via container isolation
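As one illustration of the RBAC and namespace measures listed above, the sketch below grants a single user permission to view and start pipeline runs in one namespace only. The namespace, role name, and user are hypothetical; the resource names are those of Tekton's tekton.dev API group.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: ci-team-a           # hypothetical namespace for one team's pipelines
  name: pipelinerun-operator     # hypothetical role name
rules:
  - apiGroups: ["tekton.dev"]
    resources: ["pipelineruns", "taskruns"]
    verbs: ["get", "list", "watch", "create"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  namespace: ci-team-a
  name: pipelinerun-operator-binding
subjects:
  - kind: User
    name: jane@example.com       # hypothetical user
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pipelinerun-operator
  apiGroup: rbac.authorization.k8s.io
```

Because a Role is namespaced, the user gains no access to pipeline runs in other namespaces.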

However, a weakness in Tekton's armor arises from its dependence on Kubernetes. Tekton's security is only as strong as your Kubernetes environment. If that environment is neglected, it could become the Achilles' heel of your Tekton pipelines.

The Strategy of Argo's Security

Like Tekton, Argo builds on Kubernetes' security provisions, using Kubernetes RBAC for authorization and namespaces for isolation. Argo's security arrangement goes further, however.

Argo integrates SSO (Single Sign-On), allowing users to authenticate with their existing credentials. This both simplifies the login process and reduces password-related security risks.

Argo also provides secret management for sensitive data such as API keys or passwords. Secrets are kept in Kubernetes Secret objects rather than in workflow definitions, and are injected into your pipelines in a controlled way when needed.

Argo's security mechanisms encapsulate:

- Utilization of RBAC

- Isolation with namespaces

- Integration of SSO

- Secret storage and management

While these additional security layers strengthen Argo's defenses, they also demand careful attention to the setup and operation of your pipelines. Misconfiguration could turn these helpful features into a liability and introduce vulnerabilities.

The Security Showdown: Tekton vs Argo

When evaluating the security of Tekton and Argo, it's evident that both tools have built solid defenses. Both are rooted in the security foundation of Kubernetes, which supports secure CI/CD pipelines.

Still, Argo's additional security measures, such as SSO and secret management, give it an edge over Tekton. These layers supply extra protection and convenience, but they come with extra configuration and maintenance effort.

To compare the two at a glance: both Tekton and Argo rely on Kubernetes RBAC and namespace isolation, Tekton's measures additionally highlight container isolation, and Argo adds SSO integration along with secret storage and management.

In the final count, both Tekton and Argo have built noteworthy security measures for CI/CD pipelines. Your selection would depend on your unique security requirements and the level of complexity you're comfortable managing. Next up, let's examine the configurability of Tekton and Argo pipelines.

Configurability: Customizing Tekton Pipelines

Tekton, as a cloud-native CI/CD tool, gives developers extensive configurability, letting them shape pipelines to match project-specific requirements. This section walks through the customization options available in Tekton pipelines, from adjusting basic settings to building intricate, multi-step workflows.

Basic Structure and Settings of Tekton Pipelines

Tekton pipelines are defined in YAML documents, in the same style as Kubernetes manifests, which makes them highly adjustable. A pipeline is assembled from a series of tasks, each representing a distinct stage in the CI/CD process. These tasks can be reordered or amended to suit your project's demands.

An elementary representation of a Tekton pipeline configuration is as follows:
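The original listing is not available here, so what follows is a minimal sketch consistent with the description. It assumes the `tekton.dev/v1` API (older clusters may need `tekton.dev/v1beta1`); the pipeline name and the referenced task names `compile-task` and `verify-task` are hypothetical and assumed to exist in the same namespace.

```yaml
apiVersion: tekton.dev/v1
kind: Pipeline
metadata:
  name: compile-and-verify      # hypothetical pipeline name
spec:
  tasks:
    - name: compile
      taskRef:
        name: compile-task      # assumed to be defined separately
    - name: verify
      runAfter:
        - compile               # start only once 'compile' has completed
      taskRef:
        name: verify-task       # assumed to be defined separately
```

Tasks without a `runAfter` entry have no ordering constraint between them, so Tekton is free to start them in parallel.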

In this representation, the pipeline comprises two tasks: 'compile' and 'verify'. The 'runAfter' attribute in the 'verify' task means it executes once the 'compile' task has completed. While this is an elementary example, Tekton pipelines can be much more involved, with tasks running in parallel or in a predetermined sequence.

Advanced Customization: Utilizing Parameters and Resources

Tekton introduces numerous features for advanced pipeline customization. One of these is the incorporation of parameters. Parameters are variable entities that can be injected into tasks when the pipeline is run. They empower customization of tasks, allowing them to vary based on the demands of each run of the pipeline.

An example of a task adapted to use parameters looks like this:
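As a rough sketch of such a task, assuming the `tekton.dev/v1` API: the default value, the image, and the `echo` standing in for a real compile command are all placeholders chosen for illustration.

```yaml
apiVersion: tekton.dev/v1
kind: Task
metadata:
  name: compile-task
spec:
  params:
    - name: compile-arg
      type: string
      description: Extra argument passed to the compile command
      default: "--release"       # hypothetical default value
  steps:
    - name: compile
      image: alpine:3.19         # placeholder; use your own build image
      script: |
        #!/bin/sh
        # 'echo' stands in for the real compile command
        echo "compiling with $(params.compile-arg)"
```

A Pipeline or TaskRun that references this task can override `compile-arg` in its own `params` list, which is what lets the value differ from run to run.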

Here, the 'compile-task' task accepts a parameter named 'compile-arg', which is passed as an argument to the compile command. This allows the compile command to be adjusted per pipeline run.

In addition, Tekton supports resources. Resources represent task inputs and outputs, such as code repositories or Docker images, and can be used to pass data between tasks in a pipeline. Newer Tekton releases have deprecated this resource type in favor of workspaces and results, so check which mechanism your version supports.

A task that utilizes resources may look like this:
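The sketch below uses the older PipelineResource syntax under `tekton.dev/v1beta1`, since that is what the text describes; this mechanism has since been deprecated, so treat it as illustrative of the idea, not as a recommendation. The resource names `source-repo` and `built-image` are hypothetical, and the Kaniko executor image is just one possible way to build a container image.

```yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: compile-task
spec:
  resources:
    inputs:
      - name: source-repo        # hypothetical Git repository input
        type: git
    outputs:
      - name: built-image        # hypothetical container image output
        type: image
  steps:
    - name: compile
      image: gcr.io/kaniko-project/executor:latest
      args:
        # path where the Git input was checked out
        - --context=$(resources.inputs.source-repo.path)
        # registry URL of the image output
        - --destination=$(resources.outputs.built-image.url)
```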

In this example, the 'compile-task' task accepts a Git repository as input and produces a Docker image as its output. The paths to these resources are passed as arguments to the compile command.

The Core of Tekton Pipeline Customization: Conclusion

Tekton's extensive configurability makes it a potent tool for building tailor-made CI/CD pipelines. By employing features like parameters and resources, pipelines can be shaped to meet the particular demands of your project. Whether you need a basic two-stage pipeline or an intricate multi-stage one, Tekton offers the versatility to build it.

Flexibility in Argo: Modifying your Pipeline

Argo is an influential tool in the CI/CD pipeline space and offers additional configuration options. This section walks through the ways you can modify your Argo pipeline to reflect your requirements. Whether your aim is to speed up your operations, boost productivity, or incorporate sophisticated tasks, Argo caters to your needs.

Modifying Workflow Templates

One principal asset of Argo is its workflow templates, which offer substantial versatility. These templates lay out the course your pipeline will take and can be changed to fit your requirements. Alter them to reorder stages, add new ones, or remove superfluous ones.

To reconfigure a workflow template, open the Argo Workflows UI, navigate to the 'Workflow Templates' section, and select the template you want to alter. From there, you can edit the YAML document directly, adding or removing stages where necessary.

Adding Conditional Logic

Argo also supports conditional logic in your pipelines. This lets you run certain steps only if particular conditions are met, which is especially useful for complicated workflows where not all stages are always necessary.

To add conditional logic in Argo, append 'when' conditions to your workflow steps. For example, a 'when' condition can make a step execute only if the preceding step, 'artifact-creation', succeeded.
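A minimal sketch of such a condition in an Argo Workflow follows. The step name 'artifact-creation' comes from the text; the `publish` step, the template names, and the images are hypothetical. `continueOn` is set here so the workflow reaches the second step even when the first fails, which is what makes the `when` check meaningful.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: conditional-
spec:
  entrypoint: main
  templates:
    - name: main
      steps:
        - - name: artifact-creation
            template: create-artifact
            continueOn:
              failed: true       # keep going so the 'when' below is evaluated
        - - name: publish        # hypothetical follow-up step
            template: publish-artifact
            # run only if the previous step finished successfully
            when: "{{steps.artifact-creation.status}} == Succeeded"
    - name: create-artifact
      container:
        image: alpine:3.19
        command: [sh, -c]
        args: ["echo creating artifact"]
    - name: publish-artifact
      container:
        image: alpine:3.19
        command: [sh, -c]
        args: ["echo publishing artifact"]
```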

Adjusting Resource Allocation

Argo lets you adjust the resources allotted to each step in your pipeline. This can help fine-tune your pipeline's efficiency, particularly where some stages demand more resources than others.

To alter resource allocation, add a 'resources' field to your step definition, specifying the amount of CPU and memory to assign.
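As an illustrative sketch, the 'resources' block sits inside the template's container definition and uses the standard Kubernetes requests and limits fields. The image and command are placeholders, and `1Gi` is used as the usual Kubernetes way of writing roughly one gigabyte.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: resource-demo-
spec:
  entrypoint: intense-task
  templates:
    - name: intense-task
      container:
        image: alpine:3.19       # placeholder image
        command: [sh, -c]
        args: ["echo running the heavy step"]
        resources:
          requests:              # what the scheduler reserves for the pod
            cpu: "2"
            memory: 1Gi
          limits:                # hard ceiling enforced at runtime
            cpu: "2"
            memory: 1Gi
```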

For example, an 'intense-task' step might be allotted 2 CPU cores and 1GB of memory.

Using Dynamic Parameters

A further way to add flexibility to your Argo pipelines is through dynamic parameters. Their values are not known until execution, allowing the behavior of your pipeline to adapt to the current situation.

Dynamic parameters can be used in several ways: to relay data between stages, to direct your pipeline's progression, or to customize an individual stage's behavior. They are supplied through the 'withParam' field.
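The following sketch shows one common use of 'withParam': fanning out over a JSON list produced by an earlier step. The step names 'data-creation' and 'data-processing' follow the text; the template names, images, and the three-item list are assumptions made for illustration.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: dynamic-params-
spec:
  entrypoint: main
  templates:
    - name: main
      steps:
        - - name: data-creation
            template: create-data
        - - name: data-processing
            template: process-item
            arguments:
              parameters:
                - name: item
                  value: "{{item}}"
            # expands into one run per element of the JSON list
            withParam: "{{steps.data-creation.outputs.result}}"
    - name: create-data
      script:
        image: python:3.12-alpine
        command: [python]
        source: |
          import json
          # stdout of a script template becomes outputs.result
          print(json.dumps(["a", "b", "c"]))
    - name: process-item
      inputs:
        parameters:
          - name: item
      container:
        image: alpine:3.19
        command: [sh, -c]
        args: ["echo processing {{inputs.parameters.item}}"]
```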

For example, a 'data-processing' step can take the output of a 'data-creation' step as its dynamic parameter.

To sum up, Argo offers substantial flexibility when reshaping your pipeline. Whether you are modifying workflow templates, adding conditional logic, adjusting resource allocation, or using dynamic parameters, Argo supplies the tools to construct a pipeline that fits your needs.

Real-World Applications of Tekton and Argo

In the realm of cloud-native CI/CD pipelines, Tekton and Argo have emerged as two of the most promising tools. Both have been adopted by numerous organizations across various industries, demonstrating their versatility and robustness. This chapter will delve into the real-world applications of Tekton and Argo, showcasing how they have been utilized to streamline and optimize CI/CD processes.

Tekton in Action

Tekton has been widely adopted by organizations seeking to automate their software delivery processes. One such example is the global e-commerce giant, Shopify. Shopify has leveraged Tekton to create a platform-as-a-service (PaaS) for their development teams. This has allowed them to standardize their CI/CD pipelines across multiple teams, resulting in increased efficiency and consistency.

Another notable example is the open-source project, Jenkins X. Jenkins X has integrated Tekton as a core component of its Kubernetes-based CI/CD platform. This has enabled Jenkins X to provide a cloud-native, scalable, and highly configurable CI/CD solution.
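To make the shape of such a standardized pipeline concrete, here is a generic sketch of a three-task sequential Tekton pipeline. It is not taken from any real deployment: the pipeline name and the referenced tasks are hypothetical, and the `tekton.dev/v1` API is assumed.

```yaml
apiVersion: tekton.dev/v1
kind: Pipeline
metadata:
  name: build-test-deploy        # hypothetical name
spec:
  tasks:
    - name: build
      taskRef:
        name: build-task         # assumed to be defined separately
    - name: test
      runAfter: [build]
      taskRef:
        name: test-task          # assumed to be defined separately
    - name: deploy
      runAfter: [test]
      taskRef:
        name: deploy-task        # assumed to be defined separately
```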

A simplified pipeline of this kind consists of three tasks: build, test, and deploy, which are executed sequentially.

Argo in the Real World

Argo, on the other hand, has been adopted by organizations like BlackRock and Intuit for their data-intensive workflows. BlackRock, a global investment management corporation, uses Argo to manage their machine learning workflows. This has enabled them to automate the training and deployment of their machine learning models, resulting in significant time savings and improved model accuracy.

Intuit, a financial software company, uses Argo to manage their ETL (Extract, Transform, Load) workflows. By leveraging Argo's ability to handle complex, data-intensive workflows, Intuit has been able to streamline their data processing tasks, leading to improved data quality and faster insights.
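As a generic illustration of a sequential ETL workflow in Argo, and not a reproduction of any company's actual configuration, the sketch below reuses a single hypothetical template, `run-stage`, for all three steps; the image and the `echo` command are placeholders.

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: etl-
spec:
  entrypoint: etl
  templates:
    - name: etl
      steps:                     # each outer list item runs after the previous one
        - - name: extract
            template: run-stage
            arguments:
              parameters: [{name: stage-name, value: extract}]
        - - name: transform
            template: run-stage
            arguments:
              parameters: [{name: stage-name, value: transform}]
        - - name: load
            template: run-stage
            arguments:
              parameters: [{name: stage-name, value: load}]
    - name: run-stage
      inputs:
        parameters:
          - name: stage-name
      container:
        image: alpine:3.19
        command: [sh, -c]
        args: ["echo running {{inputs.parameters.stage-name}}"]
```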

A simplified workflow of this kind consists of three steps: extract, transform, and load, which are executed in sequence.

Comparing Tekton and Argo in Real-World Applications

In short, Tekton and Argo have found their niches in different types of workflows. Tekton shines in automating and standardizing CI/CD pipelines, while Argo excels in managing complex, data-intensive workflows. Both tools have proven their worth in real-world applications, demonstrating their robustness and versatility.

Case Study: Successful Tekton Implementation

In the ecosystem of cloud-native CI/CD pipelines, Tekton has emerged as an influential tool, adopted by a growing number of enterprises. This case study describes the effective use of Tekton by a leading software development company, referred to here as 'Entity Z'.

The Challenge Facing Entity Z

As an international leader in software engineering, Entity Z was dealing with a convoluted and fragmented CI/CD pipeline. With a diverse technology stack and multiple teams engaged in varied projects, the legacy pipeline became increasingly problematic, lacking the flexibility and scalability needed to meet the company's evolving demands.

Adopting Tekton

Entity Z decided to adopt Tekton and its cloud-native approach for its CI/CD pipeline. This brought the scalability and flexibility the company needed, enabling it to define and manage pipelines as code, which simplified version control and pipeline replication.

Rolling Out Tekton

Tekton was rolled out in phases. The team first set up a single Tekton pipeline for one project as a trial, to learn how Tekton works while training the team.
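A trial of this kind typically starts with something as small as the sketch below: a single Tekton task that prints a greeting, plus a TaskRun to execute it. The names and image are hypothetical and the `tekton.dev/v1` API is assumed; this is not Entity Z's actual configuration.

```yaml
apiVersion: tekton.dev/v1
kind: Task
metadata:
  name: hello-world
spec:
  steps:
    - name: greet
      image: alpine:3.19
      script: |
        #!/bin/sh
        echo "Hello, World!"
---
apiVersion: tekton.dev/v1
kind: TaskRun
metadata:
  generateName: hello-world-run-   # a unique suffix is added on creation
spec:
  taskRef:
    name: hello-world
```

Because the TaskRun uses `generateName`, it has to be submitted with a create operation instead of an apply.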

The first pipeline Entity Z built during this trial was a basic 'Hello World' task. The next step involved expanding Tekton to additional projects, done cautiously while the team acclimatized to each new task.

Significant Outcomes

Adopting Tekton brought noteworthy improvements to Entity Z's pipelines, including:

- Increased Productivity: Tekton pipelines proved faster and more productive than the prior deployment systems, enabling rapid deployments and reducing downtime.

- Improved Scalability: Building on Tekton's cloud-native approach, Entity Z could scale its pipelines to accommodate a growing number of projects and teams.

- Simplified Governance: With Tekton, pipelines were managed as code, streamlining their control, versioning, and replication.

- Cost-Efficiency: Shorter deployment times and higher productivity reduced Entity Z's operational costs.

Lessons Learned

Entity Z's successful adoption of Tekton offers lessons for other organizations considering it:

- Gradual Rollout: Introducing Tekton progressively, starting with a trial, helps teams understand how the tool works and eases the path to wider rollouts.

- Training: Adequate training is vital for using Tekton successfully, including understanding Tekton's architecture, pipeline creation, and troubleshooting.

- Continuous Improvement: Tekton, while powerful, demands ongoing learning and refinement. Regularly reviewing and improving Tekton pipelines leads to better efficiency.

Summing up, Entity Z's successful deployment of Tekton highlights the tool's potential for building efficient, scalable, and manageable CI/CD pipelines.

Case Study: How Argo Leveled Up a Business

In the fast-moving world of software development, organizations are constantly looking for ways to refine their operations and raise productivity. A prominent online retail platform found itself struggling with its existing Continuous Integration/Continuous Deployment (CI/CD) pipeline. The platform depended on a conventional CI/CD pipeline that was not only intricate and resource-hungry but also lacked the adaptability and scalability to keep up with its rapid pace of development. This is where Argo came in, transforming the firm's software delivery lifecycle.

The Challenge

The online retailer was grappling with numerous problems. Its CI/CD pipeline was slow and unable to scale with the growing demands of the business. Built on a monolithic architecture, the pipeline was hard to manage and upgrade. The lack of flexibility meant that developers had to spend substantial time handling the pipeline rather than writing code and devising innovative solutions.

The Argo Solution

Argo, with its cloud-native approach, offered a solution that was both scalable and adaptable. Argo's declarative, Kubernetes-native workflows enabled the firm to manage its CI/CD pipeline more effectively.

Streamlined Workflows

Through Argo's event-driven approach, the firm could automate its deployment process. This let developers focus on programming while Argo handled deployment. The burden of pipeline management was considerably reduced, saving valuable time.

Scalability

Thanks to Argo's Kubernetes-based architecture, the firm could scale its CI/CD pipeline as needed. The platform could handle high-volume deployments without a significant surge in resource requirements.

Flexibility

With Argo's well-defined workflows, the firm was able to customize the pipeline to its own demands. The business could adjust its pipeline to changing requirements with minimal effort.

Results

Adopting Argo brought a major improvement to the firm's software development cycle. The platform cut pipeline management time by half, letting developers spend more time coding and devising innovative solutions. The platform was also able to handle large deployments without straining resources.

Argo also improved the reliability and stability of the CI/CD pipeline. The platform saw a decline in deployment failures, leading to higher productivity and cost-effectiveness.

Wrapping Up

The journey of this online retail platform shows Argo's potential to drive transformation. By capitalizing on Argo's cloud-native approach, the firm scaled its CI/CD pipeline, gaining efficiency, scalability, and adaptability. It is a strong example of how enterprises can benefit from incorporating Argo into their CI/CD pipelines.

Troubleshooting Common Issues in Tekton Pipelines

While Tekton offers robust functionality for building cloud-native CI/CD pipelines, you may hit a few hurdles along the way. This section walks through some frequent problems with Tekton pipelines and practical ways to resolve them.

Issue 1: PipelineRun Fails to Start

Is your PipelineRun failing to start? This common problem can have several causes, such as a flawed configuration or insufficient resources.

Solution: Check the PipelineRun's status using the tkn CLI tool. If the status is Failed, look through the logs for discrepancies. Verifying that the necessary resources are available and properly configured may help resolve the issue. If the problem persists, consider recreating your PipelineRun.

Issue 2: TaskRun Failure

TaskRuns are integral to a Tekton pipeline; a failing TaskRun can jeopardize the whole pipeline.

Solution: As with the previous issue, start by checking the TaskRun's status. If it shows Failed, the logs may contain useful leads. A small error in the Task definition or a shortage of required resources may be the culprit. Verify that the Task definition is correct and that the required resources are present.

Issue 3: Hitting Resource Limits

Tekton pipelines can run into resource constraints. This can happen if the resources available in the cluster are insufficient for the pipeline to run, or if the resource limits set for the pipeline are unrealistically low.

Solution: Leverage Kubernetes' monitoring arsenal to keep tabs on your pipeline's resource consumption. If you regularly hit the resource cap, consider ramping up the limits or upgrading your cluster.
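Limits can be raised per step in the Task definition. The sketch below assumes the `tekton.dev/v1` API, where the step field is named `computeResources` (older `v1beta1` manifests use `resources` instead); the task name, image, and values are hypothetical and should be sized from your own monitoring data.

```yaml
apiVersion: tekton.dev/v1
kind: Task
metadata:
  name: build-with-limits        # hypothetical task name
spec:
  steps:
    - name: build
      image: alpine:3.19         # placeholder image
      computeResources:
        requests:                # what the scheduler reserves
          cpu: 500m
          memory: 512Mi
        limits:                  # raise these if the step keeps hitting the cap
          cpu: "1"
          memory: 1Gi
      script: |
        #!/bin/sh
        echo "building"
```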

Issue 4: Conflicts Between Tekton Versions

Tekton evolves quickly, with new versions released regularly. Pipelines written for older Tekton versions may be incompatible with newer releases.

Solution: Make sure your Tekton pipelines match the Tekton version running on your cluster. Whenever you upgrade Tekton, test your pipelines against the new release before moving them to your live environment.

Issue 5: Network Issues

Tekton pipelines might fail due to network issues, particularly when pulling images from remote registries or connecting to external services.

Solution: Check your cluster's network configuration to confirm that the required external services are reachable. If you're pulling images from a private registry, verify that the credentials are correct.
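For private registries, one common arrangement is to attach the registry credentials to the service account that runs the pipeline. The sketch below assumes a docker-registry Secret named `registry-credentials` already exists in the namespace and that the `tekton.dev/v1` API is in use; all names, including the referenced pipeline, are hypothetical.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: pipeline-runner
imagePullSecrets:
  - name: registry-credentials   # assumed pre-existing docker-registry Secret
---
apiVersion: tekton.dev/v1
kind: PipelineRun
metadata:
  generateName: build-run-
spec:
  pipelineRef:
    name: compile-and-verify     # hypothetical pipeline
  taskRunTemplate:
    serviceAccountName: pipeline-runner   # pods pull images with this account
```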

To wrap up, Tekton, despite these hitches, is a capable tool for building CI/CD pipelines. With careful setup and persistent monitoring, you can keep these issues in check. Keep your Tekton installation and pipelines up to date, and watch your pipelines closely to detect and resolve snags before they escalate.

Solving Potential Problems in Argo Pipelines

Argo pipelines, despite their resilience and capabilities, face similar hiccups. Here we address ways to counter some common problems affecting Argo pipelines.

Problem 1: Pipeline Failure

A frequently encountered hurdle, pipeline failure can result from many factors, ranging from incorrect settings to limited resources or broken network connections.

The first step is to analyze the pipeline logs. Argo produces comprehensive logs that help pinpoint the origin of the failure. Depending on what the logs show, find and fix any configuration inconsistencies, assign additional resources to your pipeline by raising its resource limits, or restore network connectivity by adjusting firewall rules or other network settings.

Problem 2: Missing or Incorrect Output Data

Missing or wrong output data from pipeline tasks is another common issue. It can arise from a flawed task configuration or from errors in the code the task runs.

To address this, validate the configuration of the task and the definition of its output parameters. If the setup is correct, inspect the code to spot any errors.
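When checking output parameters, the usual thing to confirm is that the declared path matches the file the container actually writes. A minimal sketch with hypothetical names and a placeholder value:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Workflow
metadata:
  generateName: output-param-
spec:
  entrypoint: produce-value
  templates:
    - name: produce-value
      container:
        image: alpine:3.19
        command: [sh, -c]
        # the file written here must match valueFrom.path below
        args: ["printf 'hello' > /tmp/result.txt"]
      outputs:
        parameters:
          - name: result
            valueFrom:
              path: /tmp/result.txt
```

If the path is misspelled or the command never creates the file, the parameter cannot be collected, which is one way output data ends up missing.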

Problem 3: Pipeline Stuck in 'Pending' Stage

A pipeline might occasionally stall in the pending stage because of resource availability or a problem with the Kubernetes scheduler.

The first step is to check whether the resources the pipeline needs are available. If they are not, free up resources or adjust the resource requests in your pipeline definition. If resources are available and the pipeline is still stuck, check the scheduler logs for errors.

Problem 4: Access Control Hurdles

Argo pipelines might also run into access control complications. These generally arise when the pipeline lacks the permissions needed for specific operations.

Addressing this requires reviewing the permissions granted to the pipeline and ensuring they are sufficient. You might need to adjust role-based access control (RBAC) settings in your Kubernetes cluster.
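As one illustration of such an adjustment, recent Argo Workflows releases expect the service account running a workflow to be able to write task results. The sketch below grants that through a Role and RoleBinding; the `argo` namespace, the `default` service account, and the role names are assumptions, and the exact permissions needed depend on your Argo version and on what your steps do.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: workflow-executor        # hypothetical role name
  namespace: argo                # assumed namespace
rules:
  - apiGroups: ["argoproj.io"]
    resources: ["workflowtaskresults"]
    verbs: ["create", "patch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: workflow-executor-binding
  namespace: argo
subjects:
  - kind: ServiceAccount
    name: default                # the account the workflow pods run as
    namespace: argo
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: workflow-executor
```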

Problem 5: Compatibility Issues

Incompatibility with the Kubernetes version in use can also become an impediment for Argo pipelines.

The way out of this is to ensure the Kubernetes version in use is compatible with the Argo version. If not, upgrading or downgrading your Kubernetes version or switching to a compatible Argo version might resolve the issue.

In essence, the challenges associated with Argo pipelines are surmountable. A thorough knowledge of the potential problems, coupled with effective solutions, ensures smooth pipeline execution.

Tekton vs Argo: The Verdict on CI/CD Pipelines

Evaluations of cloud-native continuous integration and continuous deployment (CI/CD) pipelines often narrow down to Tekton or Argo. Despite similarities in core functionality, they differ in several crucial aspects. This section compares the two tools and offers a judgment based on user-friendliness, scalability, security, and practical applications.

User-Friendly Nature

In terms of user-friendliness, both Tekton and Argo have designed their interfaces with the user in mind. Nonetheless, Tekton has the upper hand with a simpler, easier-to-understand layout that favors new adopters. Argo's slightly more intricate interface demands more learning effort.

Scalability

In terms of project size, both Tekton and Argo scale well. They handle extensive projects ably and can scale up or down with project requirements. Tekton pulls ahead slightly with its more effective management of resources.

Secure Operations

Tekton and Argo do not compromise on security within any CI/CD pipeline. Tekton equips itself with a robust security blueprint, incorporating role-based access control and secret management tools. On the opposing end, Argo comes armed with an all-inclusive security scheme that encompasses encryption, access regulation, and audit trails.

Practical Implementations

In practical applications, both Tekton and Argo excel in various sectors. Tekton demonstrates its use in areas like software development and IT services, while Argo shines in sectors like financial services, health care, and technology.

Summing up, both Tekton and Argo display formidable capabilities in architecting cloud-centric CI/CD pipelines. Therefore, the selection between Tekton and Argo will be dictated by your distinct prerequisites. Should you emphasize intuitive use and resource efficiency, Tekton may be more suitable. Conversely, if your focus is a deep-seated security framework while being prepared to tackle a slightly complicated interface, Argo could be your preference. Before you finalize your decision, ensure a comprehensive assessment of both platforms.

Looking Ahead: The Future of Tekton and Argo Pipelines

Looking at the road ahead for Tekton and Argo pipelines, a pattern of constant evolution emerges. This advancement responds to the ever-changing needs of cloud-native Continuous Integration and Continuous Delivery (CI/CD) pipelines, an area in which Tekton and Argo carry notable significance.

Tekton: Setting Sights on Extensibility and Seamless Integration

Tekton is moving forward with a focus on extensibility and seamless integration. The Tekton maintainers aim to broaden its functionality to support intricate pipeline architectures and processes. This includes new Custom Resource Definitions (CRDs) that give finer control over pipeline operation and administration.

At the same time, Tekton is working to improve its compatibility with other notable tools in the CI/CD landscape. This includes better integration with version control systems, artifact repositories, and deployment tools. These efforts aim to make Tekton versatile enough to fit into any CI/CD process.

Argo: Carving a Path through Automation and Scalable Designs

Argo is moving in a slightly different direction, toward a future centred on automation and scalable operations. The Argo maintainers are emphasising features that hand more of pipeline creation and management to automated systems. This involves developing machine learning algorithms that can tune pipeline performance and resource allocation.

On scalability, Argo is working to improve its capacity to manage large-scale, intricate processes. This involves strengthening its clustering capabilities and refining its performance under heavy load. The goal is for Argo to grow in proportion to the requirements of large corporations and fast-moving development teams.

Prospects on the Horizon

The horizon looks bright for both Tekton and Argo in the landscape of cloud-native CI/CD pipelines, as both tools are set to become more powerful, versatile, and adaptable.

Yet the success of these tools also hinges on their ability to evolve with the shifting demands of DevOps teams. As fluid as the CI/CD landscape is, Tekton and Argo must keep pace with emerging trends and technologies to hold their place.

The future of Tekton and Argo pipelines looks promising, with both set to become more deeply embedded in the CI/CD ecosystem. They stand to offer DevOps teams greater flexibility, control, and adaptability in managing their pipelines, and their continued evolution is bound to influence cloud-native CI/CD pipelines significantly.

Conclusion: Which Cloud Native CI/CD Pipeline is Best for You?

When exploring CI/CD pipelines designed for Cloud Native applications, Tekton and Argo both emerge as strong competitors. Each offers a unique blend of characteristics and functionality, designed to cater to varying needs and preferences. The decision between the two depends greatly on the specific requirements of your project, the proficiency of your team, and the scope of your project.

Tekton: A sturdy, flexible solution

Tekton excels in its robust and adaptable nature. It offers an extensive catalogue of features for handling complex operations. It is engineered to be modular and extensible, giving you the freedom to shape your pipelines to your specifications. Furthermore, because Tekton builds on Kubernetes, you can use Kubernetes' orchestration capabilities to manage your pipelines.

In a team where Kubernetes knowledge is already prevalent, Tekton's compatibility becomes noteworthy. Because its syntax and paradigms mirror those of Kubernetes, it eases the learning curve for your team. Additionally, Tekton allows a high degree of customization, letting you design your pipelines precisely.

Argo: A straightforward, intuitive alternative

Argo's strength lies in its simplicity and ease of use. It takes a simplified route to CI/CD, which makes it a viable choice for teams making their initial foray into Cloud Native development or for teams who favor a less complicated methodology. Argo's user interface is uncomplicated and easy to navigate, which makes managing your pipelines smoother.

Additionally, Argo provides a substantial amount of flexibility, enabling alterations to your pipelines as needed. Its affinity for GitOps workflows gives it an upper hand if your team leans towards this CI/CD approach.

Evaluating Performance, Scalability, and Security Metrics

In terms of performance, Tekton and Argo both provide stable and effective execution of pipelines. However, results may differ based on your specific use case and infrastructure.

For large-scale operations, Tekton and Argo both have a strong record. Both harness Kubernetes' scalability, so your pipelines can scale as your requirements increase.

In the security domain, Tekton and Argo both offer robust protection. Both support RBAC (Role-Based Access Control), enabling you to manage permissions for pipeline access and changes. In addition, they use secure communication protocols, protecting your data in transit.

Deciding between Tekton and Argo

To summarize, both Argo and Tekton are commendable choices for cloud native CI/CD pipeline deployment. Your preference between the two will depend on your particular needs and circumstances.

If you value durability, adaptability, and seamless Kubernetes integration, Tekton is an appropriate choice. If you prefer a more straightforward, user-friendly tool with strong GitOps workflow support, Argo is a suitable choice.

Remember, the optimum tool is one that best aligns with your needs. Hence, thoroughly evaluate Tekton and Argo within the parameters of your specific project in order to make a well-informed decision.
